Christchurch Call: pseudo-counter-terrorism at the cost of human rights?

The Prime Minister of New Zealand, Jacinda Ardern, showed compassionate and empathetic leadership in her response to the Christchurch terrorist attack on two mosques in her country on 15 March 2019. On 16 May in Paris, Ardern and the French President Emmanuel Macron co-launched the Christchurch Call to Action to Eliminate Terrorist and Violent Extremist Content Online.

The day before, EDRi joined a meeting that the New Zealand government held with civil society and academics. Its purpose was to present the call and to hear recommendations on implementing it and on joint work to combat terrorism and white supremacy.

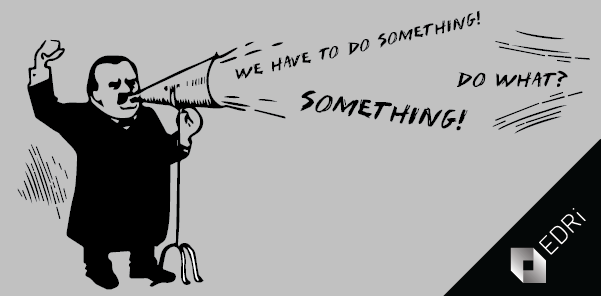

While the approach of the New Zealand government is sensible, and the final text of the call to action does include human rights safeguards for a free and open internet, the initiative is naïve: it relies on questionable practices by companies and governments, is ineffective in combating terrorism, and opens the door to serious human rights breaches.

A “sacrificed process”

In Ardern's own words, civil society consultations were “sacrificed” to allow for a swift process, so that the call could be launched on the occasion of the Tech for Good conference and the G7 Digital Ministers meeting. NGOs and other stakeholders such as journalists, academics and the technical community had no chance to submit contributions before the call was finalised. The rushed timeline was an obstacle to any meaningful participation in the process. The absence of anti-racism organisations and of organisations from the Global South from the consultative meeting in Paris is a major gap for an initiative that purports to address “violent extremism” globally.

Failure to address the social media business model

The call to action refrains from criticising or questioning the business model of Google, Amazon, Facebook and Apple in order to get them to sign the initiative. However, as long as profit comes mainly from behavioural advertising, whose revenue increases when polarising, violent or illegal content is shown, the entire system will continue to promote such content and lead people to share it. Opaque artificial intelligence encourages and amplifies human nature and all of its addictions.

Human rights concerns

As state authorities are unable to call out big tech on these larger issues, the Christchurch Call places its emphasis on removing and filtering broad and ill-defined categories of content. What counts as “terrorist and violent extremist” content can be left to the discretion of law enforcement authorities and companies, which creates a risk of arbitrary action against legitimate dissent from groups at risk of racism, human rights defenders, civil society organisations or political activists. Solutions such as upload filters or rapid content removal are error-prone and can be turned into tools of censorship, as the European Commission itself acknowledges when it states that “biases and inherent errors and discrimination can lead to erroneous decisions”. Invaluable and unique evidence of human rights abuses committed by groups or governments can also disappear, as examples from the war in Syria show. The UN Special Rapporteur on human rights and counter-terrorism estimates that around 67% of people affected by counter-terrorism or security policies are human rights defenders.

Handing policing powers and the regulation of freedom of expression over to the private sector, with no accountability or possibility of redress, is highly problematic for the rule of law. Companies’ terms of service cannot replace laws when it comes to assessing what is legal and what is not. In addition, there should be redress mechanisms to review whether only illegal terrorist content has in fact been removed; otherwise, human rights will be at risk in countries that do not have the same respect for the rule of law as New Zealand.

Algorithms used to block uploads or delete content are not transparent and do not allow for accountability or redress mechanisms. Therefore, unaccountable removal of content and incentives for over-removal must be explicitly rejected. Likewise, law enforcement authorities must be held accountable by being obliged to submit transparency reports on their requests to remove content, including the number of investigations and criminal cases opened as a result of these requests. Many initiatives address the broad range of “harmful online content”, such as the upcoming G7 Biarritz Summit, the French and UK proposals on online harms and platform duties, and the EU Regulation on Terrorist Content Online. The overall impact of initiatives that risk limiting freedom of expression needs to be evaluated based on evidence. This is currently not the case.

Broader societal efforts are needed to effectively combat terrorism – online and offline. These include education, social inclusion, questioning the impact of austerity, accountability for politicians using hate speech and stigmatising rhetoric, and real community involvement.

What the YouTube and Facebook statistics aren’t telling us (24.04.2019)

https://edri.org/what-the-youtube-and-facebook-statistics-arent-telling-us/

Commission working document – Impact assessment accompanying the Proposal for a Regulation of the European Parliament and of the Council on preventing the dissemination of terrorist content online (12.09.2018)

https://ec.europa.eu/commission/sites/beta-political/files/soteu2018-preventing-terrorist-content-online-swd-408_en.pdf

Report of the Special Rapporteur on the promotion and protection of human rights and fundamental freedoms while countering terrorism on the role of measures to address terrorism and violent extremism on closing civic space and violating the rights of civil society actors and human rights defenders (18.02.2019)

https://www.ohchr.org/Documents/Issues/Terrorism/SR/A_HRC_40_52_EN.pdf

(Contribution by Claire Fernandez, EDRi)