Electronic Frontier Norway (EFN) reports “Shinigami Eyes” to the Norwegian DPA for violation of GDPR

EDRi member Electronic Frontier Norway (EFN) found that the use of the program “Shinigami Eyes” and the operation of the database it uses constitute multiple violations of the GDPR and its Norwegian implementation. The most egregious of these is the clear violation of Article 9, which prohibits registering natural persons’ political views, philosophical convictions, sexual relations or sexual orientation, among other categories.

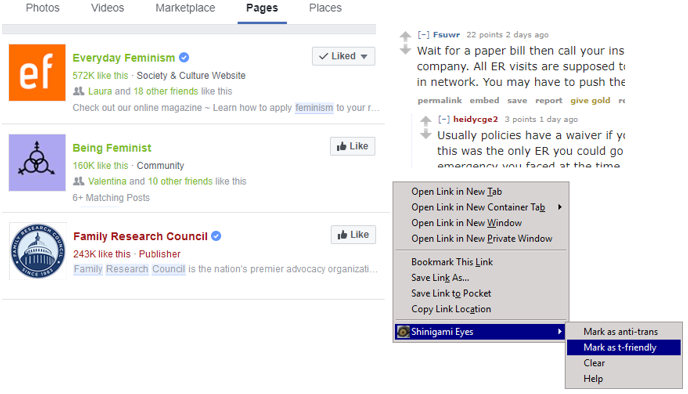

Screenshot from the browser plugin Shinigami Eyes showing how a name, in this case “Family Research Council”, can be marked as “trans friendly”. The image was found in the documentation for Shinigami Eyes that is available in the GitHub repository.

On 21 February, the national Norwegian radio program “Norsken, Svensken og Dansken” reported some concerns about the browser plugin “Shinigami Eyes”. The program’s host, Hilde Sandvik, described the plugin like this (translation by EFN):

“… I downloaded this plugin for the Chrome web browser, and that means I can flag you as trans-hostile if I believe you have voiced an opinion in this discussion” [1]

The “flagging” is shown by names being colour coded in the browser to indicate if the named entity is a “transphobe” or “trans-friendly”.

Electronic Frontier Norway (EFN) has asked the DPA to consider the legality of this tool, and also what measures can be taken against the author or the publishing channels used to distribute the plugin.

Shinigami Eyes is available from the extension stores for Google Chrome and Firefox. [2 a, b] EFN has downloaded the plugin’s source code from the public source code repository on GitHub. [3] By analysing the source code, we found the following:

- The classification of people and organisations as friendly or phobic is uploaded to a server in the US hosted by Amazon.

- The uploaded classifications seem to pass through some form of editorial function before they are added as upgrades to the plugin.

- To identify persons as transphobic or trans-friendly, a technology called “Bloom filters” is used. The technology does not store a list of names, but instead uses “hash functions” that, when queried, classify a name as one or the other. Because the technology is probabilistic, it can produce wrongful classifications: a name that was never registered may still be reported as a match.
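The Bloom filter behaviour described in the last point can be illustrated with a minimal, generic sketch. This is not the plugin's actual code: the class name `BloomFilter`, the filter size, the number of hash functions, the use of salted SHA-256 and the example names are all assumptions chosen for illustration. The sketch shows that only bits are stored, never the names themselves, and that a deliberately small filter will report some names that were never added.

```python
import hashlib
from typing import List


class BloomFilter:
    """Generic Bloom filter sketch (hypothetical, not the plugin's code)."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        # One byte per bit position, for readability rather than compactness.
        self.bits = bytearray(num_bits)

    def _positions(self, item: str) -> List[int]:
        # Derive several bit positions by salting SHA-256 with an index.
        # (Assumption: real implementations may use other hash functions.)
        positions = []
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            positions.append(int.from_bytes(digest[:8], "big") % self.num_bits)
        return positions

    def add(self, item: str) -> None:
        # Only bits are set; the name itself is never stored.
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        # True means "possibly added"; False means "definitely not added".
        return all(self.bits[pos] for pos in self._positions(item))


# A deliberately tiny filter, so that false positives become likely.
flagged = BloomFilter(num_bits=64, num_hashes=2)
added_names = [f"example-account-{i}" for i in range(20)]
for name in added_names:
    flagged.add(name)

# Names that were added are always reported as present.
print(all(flagged.might_contain(name) for name in added_names))

# Count never-added names that the filter nevertheless reports as present.
never_added = [f"unrelated-account-{i}" for i in range(1000)]
false_positives = sum(flagged.might_contain(name) for name in never_added)
print(f"{false_positives} of {len(never_added)} unregistered names matched")
```

A larger filter lowers the false-positive rate but never brings it to zero, which is why a person who was never classified by anyone can still be shown as flagged.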

It is problematic that the data is stored on a server in the USA, and there does not seem to be any kind of data processing agreement in place regulating this use, much less one that mitigates the concerns raised by the Schrems II ruling of the Court of Justice of the European Union. Finally, the governance process for the database, which contains personal data, is opaque. It is unknown who operates the service, what the editorial process consists of, whom to contact to get copies of the data stored about oneself, and what procedures exist for demanding to be left out of the database entirely.

EFN finds that the use of the program and the operation of the database it uses likely constitute multiple violations of the GDPR and its Norwegian implementation. The most egregious of these is the clear violation of Article 9, which prohibits registering natural persons’ political views, philosophical convictions, sexual relations or sexual orientation, among other categories.

Even if we assume that the browser plugin was written with the best intentions, several concerns remain that can put people in danger. For example, trans people can be marked as “trans-friendly” without their consent, and the software can be used to identify targets for online harassment, doxing, cyberstalking and even physical attacks. If the program is indeed used to identify people as targets for hate speech, then it becomes a tool for committing criminal acts in violation of §185 of the Norwegian penal code (“Straffeloven”), which prohibits hate speech.

It is unknown if the program was written with good or ill intentions. The program could be written by trans-friendly people wanting to know who is on their side when encountering them online, or it could be made by transphobic people who use it to identify and target their opponents. The absence of information about the program and the operation of the underlying database means that we simply do not know.