CSA Regulation Document Pool

This document pool contains updates and resources on the EU's proposed 'Regulation laying down rules to prevent and combat child sexual abuse' (CSA Regulation)

Content warning: this page and these resources contain discussions of child sexual abuse and exploitation

In this document pool we list articles, documents, updates and news about the proposed EU ‘Regulation laying down rules to prevent and combat child sexual abuse’ (2022/0155(COD)), which we refer to as the ‘CSA Regulation’ or ‘CSAR’. We approach this legislative proposal from a digital human rights perspective, including by analysing issues of mass surveillance, upload filters and online anonymity.

Contents

- Key legislative information including the CSAR text & annexes

- Latest news from EDRi

- EDRi position on the CSA Regulation

- Civil society demands representing 134 NGOs

- Official opinions including EDPS/EDPB, legal services & complementary impact assessment

- European Parliament position including stakeholders, reports & key votes

- EU Council position including compromise texts & key votes

- Interim ePrivacy derogation information and archive

- Terminology

- Contact us

Privacy and safety are mutually reinforcing rights. People’s ability to communicate without unjustified intrusion - whether online or offline - is vital for their rights and freedoms, as well as for the development of vibrant and secure communities and society.

1. Key legislative information

Here you will find key details about the CSA Regulation, including information about the responsible EU institutions, as well as the official legislative text and accompanying documents.

- The full name of the legislative file is the ‘Regulation of the European Parliament and of the Council laying down rules to prevent and combat child sexual abuse’ and its reference is 2022/0155(COD);

- It is often referred to as the ‘CSA Regulation’, ‘CSAR’ or ‘CSAM proposal’. It has also been dubbed ‘Chat Control’ by its critics;

- It is lex specialis to the EU’s Digital Services Act, meaning that it builds on and particularises certain parts of the DSA (those relevant to tackling child sexual abuse material online);

- The legal basis for the Regulation is Article 114 of the Treaty on the Functioning of the European Union (TFEU), which provides for the functioning of the EU Internal Market.

- Access the official annexes and Commission impact assessment by scrolling down to ‘Commission adoption’ at the following link, and downloading the relevant documents.

- The file is a proposal for a Regulation, meaning that it is not (yet) a piece of EU legislation, and therefore its rules do not (yet) apply;

- The file is currently being negotiated within the European Parliament and the Council of the EU (member states). As of the beginning of October 2023, neither institution has agreed their official position;

- Originally scheduled for October 2021 and subsequently delayed several times, the file was eventually ‘adopted’ (proposed) by the European Commission on 11 May 2022;

- The lead European Commissioner is the Commissioner for Home Affairs (2019-2024), Ylva Johansson.

- The lead European Commission department is DG HOME (Directorate-General for Migration and Home Affairs);

- DG HOME have published information and communications including a Q&A, factsheet and public campaign.

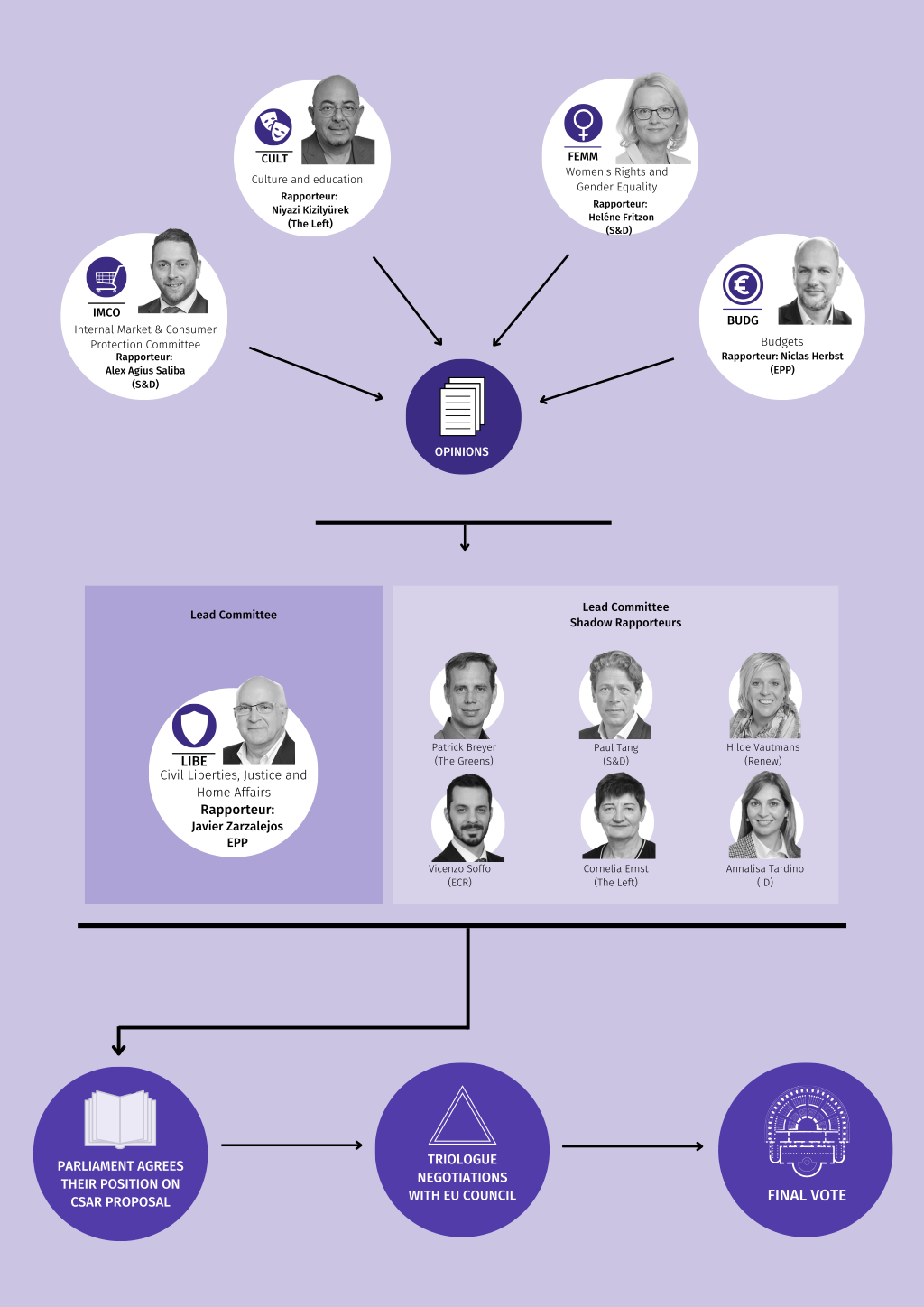

- The lead committee in the European Parliament is the committee on Civil Liberties, Justice and Home Affairs (LIBE);

- The Internal Market and Consumer Protection (IMCO) committee is an Associated Committee;

- Three committees are opinion-giving committees: the Women’s Rights and Gender Equality (FEMM) committee, the Culture and Education (CULT) committee and the Budgets (BUDG) committee;

- More information is available in the Parliament’s Legislative Observatory page for the file.

More resources, including detailed information about the responsible MEPs, reports and amendments, and dates of key votes, are available in section 6.

- The Council Configuration is ‘Justice and Home Affairs’ (JHA) (Coreper II);

- The responsible working group is the Law Enforcement Working Party (LEWP);

- Many of the Council’s documents are routinely published in the public register.

More resources, including detailed information about the Council negotiations, relevant presidency compromise documents, and dates of key votes, are available in section 7.

- The CSA Regulation was put forward alongside a complementary but distinct strategy, called ‘Better Internet for Kids’, or BIK+;

- It aims “to protect and empower children in the online world”

- The European Parliament’s Culture and Education (CULT) committee is in the process of developing a Resolution on the strategy

- The CSA Regulation is intended to replace Regulation (EU) 2021/1232, the ‘interim ePrivacy derogation’. The derogation expires on 3 August 2024, but the European Commission has confirmed [link in DE] that if necessary to avoid a legislative gap, it can be extended;

- A new European Parliament will be elected in June 2024 and a new European Commission will be appointed shortly after. If the CSA Regulation has not been fully adopted before the elections, the legislative process will automatically continue afterwards if there is a prior first reading position. If not, the Parliament’s position will lapse, and the Conference of Presidents must decide whether or not to continue the unfinished business (rule 240).

In September 2023, an investigation by journalists published in seven media outlets across Europe raised allegations of a conflict of interest involving Commissioner Johansson and DG HOME staff. These allegations include facilitating access for Thorn, a company with commercial interests in the file, and have led to questions about the legitimacy and ethics of the Commission’s process in preparing the CSA Regulation:

- Original story in Balkan Insight (in English): https://balkaninsight.com/2023/09/25/who-benefits-inside-the-eus-fight-over-scanning-for-child-sex-content/

- Zeit (in German): https://www.zeit.de/digital/datenschutz/2023-09/chatkontrolle-eu-ashton-kutcher-thorn

- Le Monde (in French): https://www.lemonde.fr/article-offert/dxvzicdekfra-6190911/pedopornographie-en-ligne-bataille-d-influence-autour-d-un-texte-europeen-controverse

- De Groene Amsterdammer (in Dutch): https://www.groene.nl/artikel/wie-heeft-hier-baat-bij-niet-de-kinderen

- El Diario (in Spanish): https://www.eldiario.es/tecnologia/conflictos-intereses-lucha-ue-pornografia-infantil-internet_1_10535781.html

- IRPI Media (in Italian): https://irpimedia.irpi.eu/sorveglianze-pericoli-nascosti-regolamento-anti-pedopornografia/

- Solomon (in Greek): https://wearesolomon.com/el/mag/thematikh/logodosia-diafaneia/poios-ofeleitai-apo-ton-agona-tis-ee-kata-tis-paidikis-sexoualikis-kakopoihshs/

- Additional reporting detailing Europol’s request for the CSA Regulation to be expanded in future to allow scanning for other types of content: https://balkaninsight.com/2023/09/29/europol-sought-unlimited-data-access-in-online-child-sexual-abuse-regulation/

- Netzpolitik (in German): https://netzpolitik.org/2023/interne-dokumente-europol-will-chatkontrolle-daten-unbegrenzt-sammeln/

The European Parliament’s Civil Liberties (LIBE) committee wrote to the Commissioner to request an explanation, and subsequently received a response from Thorn and from Commissioner Johansson:

- LIBE coordinators letter: https://cloud.edri.org/index.php/s/PMMTGWXXQiom8GZ

- Response from Thorn: https://netzpolitik.org/wp-upload/2023/10/2023-10-02_Thorn_LIBE_Letter.pdf

- Response from Commissioner Johansson: https://cloud.edri.org/index.php/s/TrEykTHYA2Cd4d8

2. Latest news

Read EDRi’s latest blogs about the CSA Regulation, including legislative developments and key digital human rights concerns.

- Press Release (CSA Regulation): Who benefits from the EU Commission’s mass surveillance law?

A newly-published independent investigation uncovered that the European Commission has been promoting industry interests in its proposed law to regulate the spread of child sexual abuse material online.

- Is this the most criticised draft EU law of all time?

An unprecedentedly broad range of stakeholders have raised concerns that despite its important aims, the measures proposed in the draft EU Child Sexual Abuse Regulation are fundamentally incompatible with human rights.

- Open letter: Hundreds of scientists warn against EU’s proposed CSA Regulation

Over 300 security researchers & academics warn against the measures in the EU's proposed Child Sexual Abuse Regulation (CSAR), citing harmful side-effects of large-scale scanning of online communications which would have a chilling effect on society and negatively affect democracies. The letter remains open for signatures.

3. EDRi position on the CSA Regulation

Download EDRi’s position paper on the CSA Regulation, in both the full version and as a short summary booklet. You can also find our ten principles to defend children’s rights in the digital age.

- (🇬🇧 English) ‘EDRi’s 10 principles to defend children in the digital age’ (09.02.2022)

- (🇩🇪 German) ‘Prinzipien von EDRi für Ausnahmen von der Datenschutzrichtlinie für elektronische Kommunikation zum Zweck der Entdeckung von online verbreiteten Darstellungen des sexuellen Missbrauchs von Kindern (CSAM)’ (09.02.2022)

4. Civil society demands

The EDRi network and our partners are part of a 134-strong coalition of NGOs warning that the risks of mass surveillance, online censorship, discrimination and digital exclusion are so severe that the EU’s proposed CSA Regulation should be rejected.

- Open letter: EU countries should say no to the CSAR mass surveillance proposal

Today, EDRi and 81 organisations have sent an open letter to EU governments to once again urge them to say no to the CSA Regulation until it fully protects online rights, freedoms, and security.

- European Commission must uphold privacy, security and free expression by withdrawing new law, say civil society

In May, the European Commission proposed a new law: the CSA Regulation. If passed, this law would turn the internet into a space that is dangerous for everyone’s privacy, security and free expression. EDRi is one of 134 organisations calling instead for tailored, effective, rights-compliant and technically-feasible alternatives to tackle this grave issue.

- Open letter: Protecting digital rights and freedoms in the Legislation to effectively tackle child abuse

EDRi is one of 52 civil society organisations jointly raising our voices to the European Commission to demand that the proposed EU Regulation on child sexual abuse complies with EU fundamental rights and freedoms. You can still add your voice now!

- Chat control: 10 principles to defend children in the digital age

The automated scanning of everyone’s private communications, all of the time, constitutes a disproportionate interference with the very essence of the fundamental right to privacy. It can constitute a form of undemocratic mass surveillance, and can have severe and unjustified repercussions on many other fundamental rights and freedoms, too.

5. Official opinions

Find resources and opinions about the CSA Regulation from other EU institutions and authorities, as well as relevant national bodies.

EDPB-EDPS Joint Opinion 04/2022 on the Proposal for a Regulation of the European Parliament and of the Council laying down rules to prevent and combat child sexual abuse – 28 July 2022

Proposal for a regulation laying down rules to prevent and combat child sexual abuse: Complementary impact assessment, Study, European Parliamentary Research Service – April 2023

Opinion of the Legal Service, Proposal for a Regulation laying down rules to prevent and combat child sexual abuse – detection orders in interpersonal communications – Articles 7 and 8 of the Charter of Fundamental Rights – Right to privacy and protection of personal data – proportionality – 26 April 2023

Opinion: Impact assessment / Regulation on detection, removal and reporting of child sexual abuse online, and establishing the EU centre to prevent and counter child sexual abuse – 15 February 2022

Opinion from the Houses of the Oireachtas, Joint Committee on Justice, on the application of the principles of Subsidiarity and Proportionality – 30 March 2023

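Résolution n° 77 (2022-2023), devenue résolution du Sénat le 20 mars 2023, RÉSOLUTION EUROPÉENNE sur la proposition de règlement du Parlement européen et du Conseil établissant des règles en vue de prévenir et de combattre les abus sexuels sur enfants – COM(2022) 209 final [Resolution No. 77 (2022-2023), which became a resolution of the French Senate on 20 March 2023: European resolution on the proposal for a regulation of the European Parliament and of the Council laying down rules to prevent and combat child sexual abuse]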

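Antrag auf Stellungnahme gemäß Art. 23e Abs. 3 B-VG der Abgeordneten Mag. Carmen Jeitler-Cincelli, BA, Katharina Kucharowits, Süleyman Zorba, MMag. Katharina Werner, Bakk., TOP 1 COM (2022) 209 final Vorschlag für eine Verordnung des Europäischen Parlaments und des Rates zur Festlegung von Vorschriften zur Prävention und Bekämpfung des sexuellen Missbrauchs von Kindern (109099/EU XXVII.GP) [Motion for an opinion under Art. 23e(3) B-VG by the named Members, on agenda item 1, COM(2022) 209 final, Proposal for a Regulation of the European Parliament and of the Council laying down rules to prevent and combat child sexual abuse]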

„Chatkontrolle“ – Analyse des Verordnungsentwurfs 2022/0155 (COD) der EU-Kommission [‘Chat control’: analysis of the EU Commission’s draft regulation 2022/0155 (COD)]

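USNESENÍ výboru pro evropské záležitosti, Návrh nařízení o pravidlech pro předcházení pohlavnímu zneužívání dětí a boj proti němu [Resolution of the Committee on European Affairs, Proposal for a regulation on rules to prevent and combat child sexual abuse]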

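Motie van het lid Van Ginneken c.s. over zorgen dat het Europese voorstel geen encryptiebedreigende chatcontrol bevat [Motion by Member Van Ginneken et al. on ensuring that the European proposal contains no encryption-threatening chat control]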

6. European Parliament position

Here you’ll find information about the Members of the European Parliament (MEPs) leading work on the CSA Regulation, official reports and opinions, key amendments, dates of votes, and any other relevant information.

- The lead committee in the European Parliament is the committee on Civil Liberties, Justice and Home Affairs (LIBE);

- The lead Member of the European Parliament (MEP) (aka ‘rapporteur’) for the LIBE committee is MEP Javier Zarzalejos (Spain, European People’s Party);

- The Internal Market and Consumer Protection (IMCO) committee is an Associated Committee;

- The rapporteur for the IMCO committee is MEP Alex Agius Saliba (Malta, Socialists & Democrats);

- Three committees are opinion-giving committees: the Women’s Rights and Gender Equality (FEMM) committee, the Culture and Education (CULT) committee and the Budgets (BUDG) committee.

- Draft report: ***I Draft report on the proposal for a regulation of the European Parliament and of the Council Laying down rules to prevent and combat child sexual abuse, Committee on Civil Liberties, Justice and Home Affairs, Rapporteur Javier Zarzalejos (19.4.2023), available at: https://www.europarl.europa.eu/doceo/document/LIBE-PR-746811_EN.pdf

- Amendments tabled in LIBE committee:

- Compromise amendments: not publicly available

- Committee vote: 28 September (delayed), 9 October 2023 (delayed), 26 October 2023

- Plenary: vote or confirmation of mandate expected 8/9 November or w/c 20 November 2023

- Final report: following plenary

- IMCO: [Draft] Draft Opinion (8.2.2023), available at: https://www.europarl.europa.eu/doceo/document/IMCO-PA-740727_EN.pdf

- IMCO: [Final] Opinion of the Committee on the Internal Market and Consumer Protection for the Committee on Civil Liberties, Justice and Home Affairs on the proposal for a regulation of the European Parliament and of the Council Laying down rules to prevent and combat child sexual abuse, Rapporteur for opinion: Alex Agius Saliba (3.7.2023), available at: https://www.europarl.europa.eu/doceo/document/IMCO-AD-740727_EN.pdf

- FEMM: [Final] Opinion of the Committee on Women’s Rights and Gender Equality for the Committee on Civil Liberties, Justice and Home Affairs on the proposal for a regulation of the European Parliament and of the Council Laying down rules to prevent and combat child sexual abuse, Rapporteur for opinion: Heléne Fritzon (29.6.2023), available at: https://www.europarl.europa.eu/doceo/document/FEMM-AD-746640_EN.pdf

- CULT: [Final] Opinion of the Committee on Culture and Education for the Committee on Civil Liberties, Justice and Home Affairs on the proposal for a regulation of the European Parliament and of the Council Laying down rules to prevent and combat child sexual abuse, Rapporteur for opinion: Niyazi Kizilyürek (29.3.2023), available at: https://www.europarl.europa.eu/doceo/document/CULT-AD-737365_EN.pdf

- BUDG: [Final] Opinion of the Committee on Budgets for the Committee on Civil Liberties, Justice and Home Affairs on the proposal for a regulation of the European Parliament and of the Council Laying down rules to prevent and combat child sexual abuse, Rapporteur for opinion: Niclas Herbst (9.6.2023), available at: https://www.europarl.europa.eu/doceo/document/BUDG-AD-740759_EN.pdf

EDRi blogs about the European Parliament's position

- Voluntary detection measures still on the table for the CSA Regulation

Whilst the draft EU CSA Regulation is intended to replace current voluntary scanning of people's communications with mandatory detection orders, lawmakers in the Council and Parliament are actively considering supplementing this with "voluntary detection orders". However, our analysis finds that voluntary measures would require a legal basis in the CSA Regulation, which would likely fall foul of the Court of Justice. Content warning: contains discussions of child sexual abuse and child sexual abuse material

- Press Release: The EU’s Internal Market Committee votes for protecting encryption in the CSA Regulation

The European Union’s Internal Market and Consumer Protection (IMCO) Committee becomes the fourth European Parliament Committee to adopt an opinion on the European Union Child Sexual Abuse (CSA) Regulation, voting to protect encryption and rule out unacceptably risky technologies.

- Civil liberties MEPs warn against undermining or circumventing encryption in CSAR

MEPs from the European Parliament’s Civil Liberties committee have thrown down the gauntlet with their amendments to one of the EU’s most controversial proposals: the Child Sexual Abuse Regulation (CSAR). These amendments show a clear majority for fully protecting the integrity of encryption. Content warning: contains discussions of child sexual abuse and child sexual abuse material

- LIBE lead MEP fails to find silver bullet for CSA Regulation

On 19 April 2023, the lead MEP on the proposed CSA Regulation, Javier Zarzalejos (EPP), published his draft report. Whilst we agree with MEP Zarzalejos about putting privacy, safety and security by design at the heart, many of his changes may pose a greater risk to human rights online than the European Commission’s original text.

- Internal market MEPs wrestle with how to fix Commission’s CSAR proposal

The European Union’s proposed CSA Regulation (Regulation laying down rules to prevent and combat child sexual abuse) is one of the most controversial and misguided European internet laws that we at EDRi have seen. Whilst aiming to protect children, this proposed law from the Commissioner for Home Affairs, Ylva Johansson, would obliterate privacy, security and free expression for everyone online.

- Johansson’s address to MEPs shows why the CSA law will fail the children meant to benefit from it

On 10 October 2022, EU Commissioner for Home Affairs, Ylva Johansson, addressed the European Parliament’s Civil Liberties (LIBE) Committee about the proposed EU Child Sexual Abuse Regulation (2022/0155). The address followed months of criticism from civil liberties groups, data protection authorities and even governments due to the risks it poses to everyone’s privacy, security and free expression online.

7. EU Council position

Here you’ll find information about the timeline of the EU Council of member states, often known as ‘the Council’, including key dates and key publicly-available documents.

- The file has been allocated to the Justice and Home Affairs configuration of the EU Council;

- Preparatory work is being led by the Law Enforcement Working Party (LEWP);

- The EU Council’s General Approach (position) was scheduled to be voted on by Justice and Home Affairs ministers representing each EU member state on 28 September 2023. This vote was not taken, as a significant number of member states had informed the Spanish Presidency of the EU Council that they would not be able to agree to the law in its current form;

- The next opportunity for a vote by the Justice and Home Affairs ministers is 19/20 October 2023. However, a spokesperson confirmed to Politico that no vote is currently scheduled. The schedule for this meeting will be published on 12/13 October;

- The next opportunity for a vote after that will be 4/5 December 2023.

- Leaked notes from the German delegation about the Law Enforcement Working Party meeting on 14 September 2023, discussing the compromise text of 8 September (in DE) – EU-Rat verschiebt Abstimmung über Chatkontrolle [EU Council postpones vote on chat control]

- Presidency compromise text – 8 September 2023 – Document 12611/23

- Presidency compromise text – 1 August 2023 – Document 12066/23

- Presidency compromise text – 16 July 2023 – Document 11518/23

- Presidency compromise text – 29 June 2023 – Document 10833/23

- Presidency compromise text – 8 June 2023 – Document 9971/23

- Presidency compromise text – 17 May 2023 – Document 9317/23

- Presidency compromise text – 4 May 2023 – Document 8845/23

- Presidency compromise text – 21 April 2023 – Document 7842/23

- Presidency compromise text – 23 March 2023 – Document 7595/23

- Presidency compromise text – 8 March 2023 – Document 7038/23

Document 9512/23

Document 9533/23

Document 8285/23

Document 8833/23

Document WK 10235/2022 ADD 10 REV 2.

Document has not been made public by the Council, but was leaked to Wired.

Additional analysis by Wired available here.

Document 8200/23

Document 7354/23

Document 14862/22

These are all listed in our blog, ‘Is this the most criticised EU law of all time?’

EDRi blogs about the Council of the EU position

- Council poised to endorse mass surveillance as official position for CSA Regulation

The Council of EU Member States are close to finalising their position on the controversial CSA Regulation. Yet the latest slew of Council amendments – just like the European Commission’s original – endorse measures which amount to mass surveillance and which would fundamentally undermine end-to-end encryption.

- Despite warning from lawyers, EU governments push for mass surveillance of our digital private lives

Whilst several EU governments are increasingly alert to why encryption is so important, the Council is split between those that are committed to upholding privacy and digital security in Europe, and those that aren’t. The latest draft Council text does not go anywhere near far enough to make scanning obligations targeted, despite clear warnings from their own lawyers.

- The Belgian government is failing to consider human rights in CSA Regulation

Despite the clear warnings, Belgium has taken a position calling on the EU to adopt the CSA regulation as quickly as possible, dismissing the technical problems, and without addressing the serious legal concerns that have been raised.

- Irish and French parliamentarians sound the alarm about EU’s CSA Regulation

The Irish parliament’s justice committee and the French Senate have become the latest voices to sound the alarm about the risk of general monitoring of people’s messages in the proposed Child Sexual Abuse (CSA) Regulation.

- Who does the EU legislator listen to, if it isn’t the experts?

There's a huge gap between the advice given by experts on combatting child sexual abuse and the legislative proposal of the European Commission.

- Member States want internet service providers to do the impossible in the fight against child sexual abuse

In May 2022, the European Commission presented its proposal for a Regulation to combat child sexual abuse (CSA) online. The proposal contains a number of privacy-intrusive provisions, including obligations for platforms to indiscriminately scan the private communications of all users (dubbed “chat control”). There are also blocking obligations for internet service providers (ISPs), which are the focus of this article.

8. Interim ePrivacy derogation

The CSA Regulation is intended to replace the interim ePrivacy derogation, Regulation (EU) 2021/1232. Learn more about EDRi’s work on the interim ePrivacy derogation and other information about what happened prior to the CSA Regulation being proposed.

- ‘Open Letter: Civil society views on defending privacy while preventing criminal acts’ (27.10.2020)

- Opinion of the European Data Protection Supervisor (EDPS) on the proposed temporary ePrivacy derogation (10.11.2020)

- European Parliamentary Research Service (EPRS) ‘Targeted substitute impact assessment on the Commission proposal on the temporary derogation from the e-Privacy Directive for the purpose of fighting online child sexual abuse’ (February 2021)

Blogs and articles about the interim ePrivacy derogation

- A beginner’s guide to EU rules on scanning private communications: Part 2

Vital EU rules on human rights and on due process protect all of us from unfair, arbitrary or discriminatory interference with our privacy by states and companies. As we await the European Commission’s proposal for a law which we fear may make it mandatory for online chat and email services to scan every person’s private messages all the time, which may constitute mass surveillance, this blog explores what rights-respecting investigations into child sexual abuse material (CSAM) should look like instead.

- A beginner’s guide to EU rules on scanning private communications: Part 1

In July 2021, the European Parliament and EU Council agreed temporary rules to allow webmail and messenger services to scan everyone’s private online communications. In 2022, the European Commission will propose a long-term version of these rules. In the first installment of this EDRi blog series on online ‘CSAM’ detection, we explore the history of the file, and why it is relevant for everyone’s digital rights.

- It’s official. Your private communications can (and will) be spied on

On 6 July, the European Parliament adopted in a final vote the derogation to the main piece of EU legislation protecting privacy, the ePrivacy Directive, to allow Big Tech to scan your emails, messages and other online communications.

- iSpy with my little eye: Apple’s u-turn on privacy sets a precedent and threatens everyone’s security

Apple has just announced significant changes to their privacy settings for messaging and cloud services: first, it will scan all images sent by child accounts; second, it will scan all photos as they are being uploaded to iCloud. With these changes, Apple is threatening everyone’s privacy, security and confidentiality.

- Wiretapping children’s private communications: Four sets of fundamental rights problems for children (and everyone else)

On 27 July 2020, the European Commission published a Communication on an EU strategy for a more effective fight against child sexual abuse material (CSAM). As a long-term proposal is expected to be released by this summer, we review some of the fundamental rights issues posed by the initiatives that push for the scan of all private communications.

- Is surveilling children really protecting them? Our concerns on the interim CSAM regulation

On 27 July, the European Commission published a Communication on an EU strategy for a more effective fight against child sexual abuse material (CSAM). The Communication indicated several worrying measures that could have devastating effects for your privacy online. The first of these measures is out now.

9. Terminology

‘CSAM’ stands for ‘Child Sexual Abuse Material’. It’s a term used to refer to videos, photos, and sometimes written or audio content, which depict the sexual solicitation, abuse and exploitation of under-18s. Generally, the term CSAM is used to refer to the online sharing of such material, for example via messages, or in materials that are uploaded to a cloud server.

Sometimes the terms ‘CSEM’ or ‘CSAEM’ are used to specify that “exploitation” is also included.

‘OCSA’ stands for ‘online child sexual abuse’ and is the term used in the EU’s CSA Regulation to include both the material (CSAM) and the associated behaviours, such as grooming.

A derogation is an exception, passed via a legislative process, to carve out provisions of another law that will no longer apply in the specific context in which the derogation operates.

Wikipedia describes lex specialis as follows: “Lex specialis, in legal theory and practice, is a doctrine relating to the interpretation of laws and can apply in both domestic and international law contexts. The doctrine states that if two laws govern the same factual situation, a law governing a specific subject matter (lex specialis) overrides a law governing only general matters (lex generalis).”

10. Contact us

Want to know more about the CSA Regulation or EDRi’s work on it? Contact our advisors working on the file:

Ella Jakubowska

Senior Policy Advisor

E-Mail: ella.jakubowska [at] edri [dot] org

PGP: 8B92 3E96 4E53 83F3 2F92 240A F53D 739E CFDA 2711

Twitter: @ellajakubowska1

Mastodon: @

Diego Naranjo

Head of Policy

E-Mail: diego.naranjo [at] edri [dot] org

PGP: 9A62 189E DB31 1798 6A8E FD45 E320 B10D 3493 8C21

Twitter: @DNBSevilla