New poll exposes public fears over the use of AI by governments in national security

Public opinion has so far been largely missing from discussions on future controls on Artificial Intelligence (AI), including the negotiations in Brussels on a new EU AI Act. That is why EDRi’s affiliate European Center for Not-for-Profit Law (ECNL) commissioned a survey in 12 EU countries, in which a representative sample of the public was asked for its views on the use of AI by governments.

The poll has exposed public fears and shown stark differences with some of the positions taken by EU countries. These include whether there should be EU-wide controls over AI that is developed or used for national security.

National security means different things to different people and lacks a strict, agreed definition. Its meaning also depends heavily on how each national government classifies it, and governments could use their own definitions to label citizens or interest groups (e.g. climate protestors) as ‘extremists’ or ‘terrorists’. When powerful AI tools such as facial recognition are used for surveillance, a ‘national security’ exemption in the EU AI Act would be open to abuse and could harm our fundamental rights and freedoms.

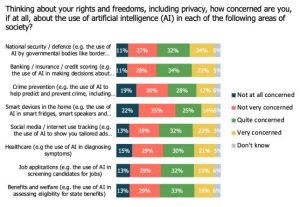

ECNL’s poll results demonstrate that European citizens recognise this threat.

After banking, national security is the area where people are most concerned about the use of AI (55%). This exceeds the level of concern they have about AI use in healthcare, recruitment or welfare.

The survey also reveals overwhelming public support for AI rules that fully protect human rights in all circumstances. Seventy percent of respondents said that when governments use AI for national security, the rights of individuals and groups should always be respected, without any exceptions.

Visit ECNL’s website for more details, including a summary analysis and the responses from all 12 countries in their national languages.

(Contribution by: Vanja Skoric, Program Director, and David Nichols, Senior Advocacy Advisor, EDRi affiliate European Center for Not-for-Profit Law)