Biometrics

Biometrics refers to the use of analytical tools and devices which capture, record and/or process people’s physical or behavioural data. This can include faces (commonly known as facial recognition), fingerprints, DNA, irises, walking style (gait), voice and other characteristics. Under the EU’s data protection laws, this biometric data is especially sensitive. It is linked to our individual identities, and can also reveal protected and intimate information about who we are, where we go, our health status and more. When used to indiscriminately target people in public spaces, to predict behaviours and emotions, or in situations where there is an imbalance of power, biometric surveillance such as facial recognition has been shown to violate a wide range of fundamental rights.

-

Will MEPs ban Biometric Mass Surveillance in key EU AI Act vote?

The EDRi network and partners have advocated for the EU to ban biometric mass surveillance for over three years through the Reclaim Your Face campaign. On May 11, their call may turn into reality as Members of the European Parliament’s internal market (IMCO) and civil liberties (LIBE) Committees vote on the AI Act.

-

Retrospective facial recognition surveillance conceals human rights abuses in plain sight

Following the burglary of a French logistics company in 2019, facial recognition technology (FRT) was used on security camera footage of the incident in an attempt to identify the perpetrators. In this case, the FRT system listed two hundred people as potential suspects. From this list, the police singled out ‘Mr H’ and charged him with the theft, despite a lack of physical evidence to connect him to the crime. The judge decided to rely on this notoriously discriminatory technology, sentencing Mr H to 18 months in prison.

-

France becomes the first European country to legalise biometric surveillance

EDRi member and Reclaim Your Face partner La Quadrature du Net charts out the chilling move by France to undermine human rights progress by ushering in mass algorithmic surveillance, which, shockingly, has been authorised by national parliamentarians.

-

Protect My Face: Brussels residents join the fight against biometric mass surveillance

The newly launched Protect My Face campaign gives residents of the Brussels region of Belgium the opportunity to oppose mass facial recognition. EDRi applauds this initiative, which demands that the Brussels Parliament ban these intrusive and discriminatory practices.

-

Open Letter: The AI video surveillance measures in the Olympic Games 2024 law violate human rights

In an open letter, EDRi, ECNL, La Quadrature du Net, Amnesty International France and 34 civil society organisations call on the French Parliament to reject Article 7 of the proposed law on the 2024 Olympic and Paralympic Games.

-

The secret services’ reign of confusion, rogue mayors, racist tech and algorithm oversight (or not)

Have a quick read through January’s most interesting developments at the intersection of human rights and technology from the Netherlands.

-

2023: Important consultations for your Digital Rights!

Public consultations are an opportunity to influence future legislation at an early stage, in the European Union and beyond. They are your opportunity to help shape a brighter future for digital rights, such as your right to a private life, data protection, or your freedom of opinion and expression.

-

SERBIA: Government retracts again on biometric surveillance

Another attempt to legalise mass biometric surveillance in Serbia was ditched by the government in a sudden U-turn just as 2022 was drawing to a close. This was the second draft law on internal affairs in little over a year to fail at the public hearing stage, before it could be formally put to a parliamentary vote.

-

Emotion (Mis)Recognition: is the EU missing the point?

The European Union is on the cusp of adopting a landmark piece of legislation, the Artificial Intelligence Act. The law aims to enable a European AI market which guarantees safety and puts people at its heart. But an incredibly dangerous aspect remains largely unaddressed: putting a stop to Europe’s burgeoning 'emotion recognition' market.

-

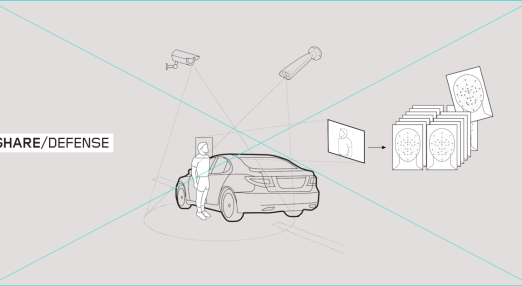

Phone unlocking vs biometric mass surveillance: what’s the difference?

Facial recognition is one of the most hotly debated topics in the European Union’s (EU) Artificial Intelligence Act. Lawmakers are more aware than ever of the risks posed by automated surveillance systems which pervasively track our faces, as well as our bodies and movements, across time and place. This can amount to biometric mass surveillance (BMS), which undermines our anonymity and freedom, and weaponises our faces and bodies against us. This article explores the types of biometric technology and their implications.

-

Remote biometric identification: a technical & legal guide

Lawmakers are more aware than ever of the risks posed by automated surveillance systems which track our faces, bodies and movements across time and place. In the EU's AI Act, facial and other biometric systems which can identify people at scale are referred to as 'Remote Biometric Identification', or RBI. But what exactly is RBI, and how can you tell the difference between an acceptable and unacceptable use of a biometric system?

-

What are the provisions of the new draft policing laws?

The SHARE Foundation has consistently advocated against the legalisation of mass, indiscriminate biometric surveillance for the past four years, particularly during the consultation process launched upon the withdrawal of the first Draft Law on Internal Affairs. A new draft with old fundamental issues is now before us. The public hearing is open until the end of December.
