#ProtectNotSurveil: EU must ban AI uses against people on the move

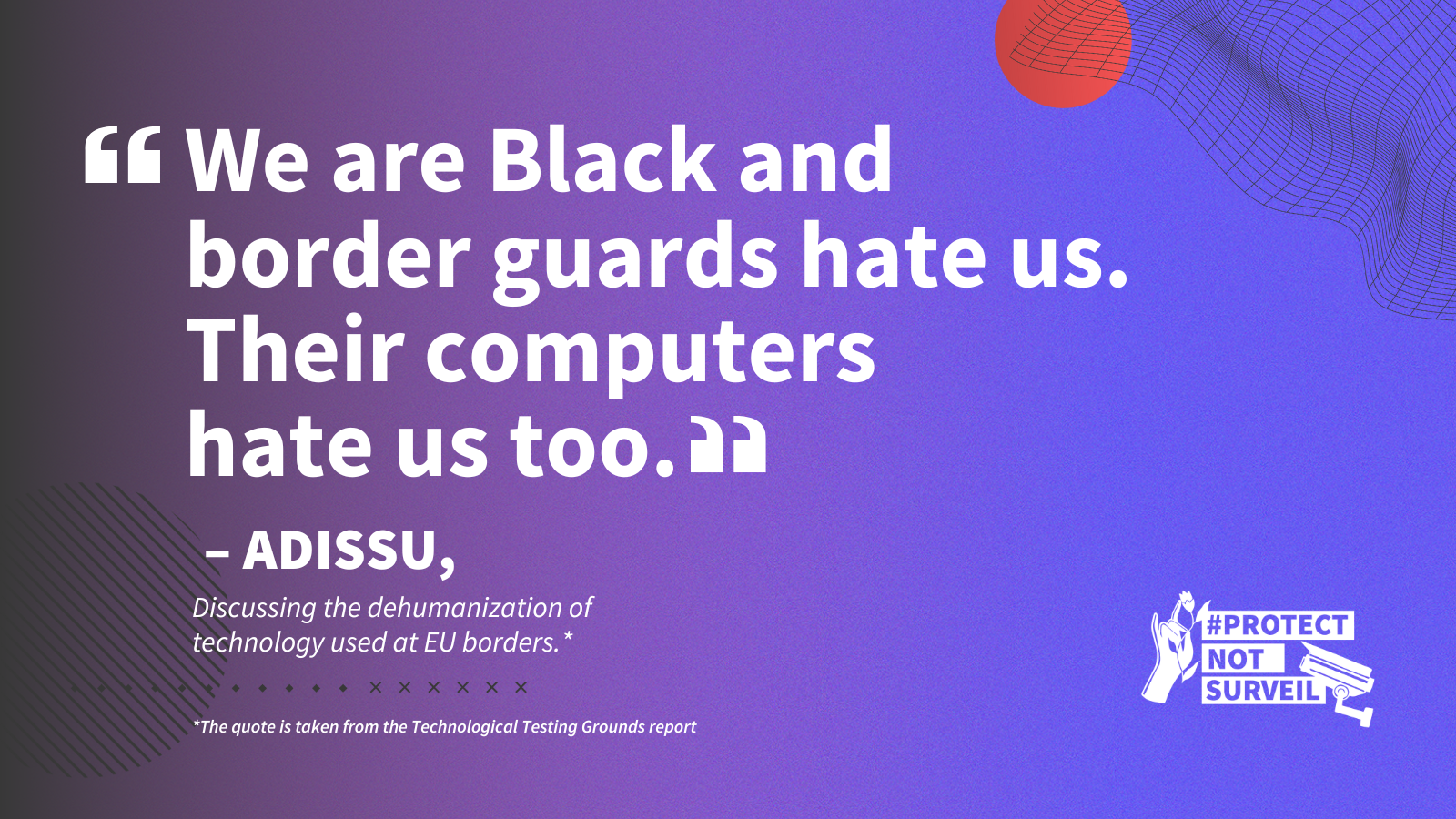

As the European Parliament regulates the most harmful AI technologies, a coalition of civil society calls on the EU to #ProtectNotSurveil people on the move.

From AI lie-detectors, AI risk profiling systems used to assess ‘risky’ movement, and AI for illegal pushbacks, to the rapidly expanding tech-surveillance complex at Europe’s borders, AI systems are increasingly a feature of migration management in the EU.

As the European Parliament negotiates its position on the Artificial Intelligence Act (‘AI Act’), a coalition of civil society organisations has called on negotiators to better protect the rights of people on the move.

AI isn't neutral or objective! It supercharges human rights abuses that are already happening. Join our #ProtectNotSurveil campaign to ensure that the AI Act protects everyone.

How is AI used in migration control?

In the migration context, AI is used to make predictions, assessments and evaluations about people in relation to their migration claims. Of particular concern is the use of AI to assess whether people on the move present a ‘risk’ of illegal activity or security threats. AI systems in this space are inherently discriminatory, pre-judging people on the basis of factors outside of their control. Along with AI lie detectors, polygraphs and emotion recognition, we see how AI is being used and developed within a broader framework of racialised suspicion against migrants.

We also increasingly see AI systems used for ‘digital pushbacks’ – to predict and interdict movements of people travelling to Europe to seek asylum or otherwise make migration claims. As documented by the Border Violence Monitoring Network, there have been recorded incidents of the use of new technologies to facilitate forced disappearances of migrants and human rights defenders in a total of 15 countries (including 10 EU Member States).

“Since 2017, BVMN has recorded 33 testimonies recording the use of drones to locate and apprehend migrants and asylum seekers during pushback operations affecting an estimated 1,004 persons.” – Border Violence Monitoring Network, EU Member States’ Use of New Technologies in Enforced Disappearances

What should the EU do?

As the European Parliament negotiates its position on the AI Act, we are calling for the AI Act to be updated in three main ways to address AI-related harms in the migration context:

- Prohibit the use of AI for digital pushbacks and discriminatory risk assessments as ‘unacceptable uses’ of AI (Article 5 AI Act). This should include prohibitions on:

    - AI-based individual risk assessment and profiling systems in migration contexts drawing on personal and sensitive data

    - predictive analytic systems when used to interdict, curtail and prevent migration

- Include within ‘high-risk’ use cases AI systems in migration control (Annex III) including: all other AI-based risk assessments; predictive analytic systems used in migration, asylum and border control management; biometric identification systems; and AI systems used for monitoring and surveillance in border control.

- Amend Article 83 to ensure that AI systems forming part of large-scale EU IT databases are within the scope of the AI Act and that the necessary safeguards apply to uses of AI in the EU migration context.

Visual identity of the campaign: Vidushi Yadav, Illustrator and visual designer