2020 is the year the EU ‘woke up’ to structural racism. The murder of George Floyd on 25 May spurred global uprisings, reaching even the EU’s political capital. For the first time in decades, we have witnessed a substantive conversation on racism at the highest level of EU decision-making. In June this year, European Commission President Ursula von der Leyen frankly said that ‘we need to talk about racism’.

Since then, the furore in Brussels has settled down. Now it’s time to put words and commitments into action that will make a real difference to Black and Brown lives. In September, Commissioners announced an Action Plan on Racism detailing the EU’s response.

Structural racism appears in policy areas from health and employment to climate. It is increasingly clear that digital and technology policy is not race-neutral either, and needs to be addressed through a racial justice lens.

2021 will be a flagship year for EU digital legislation. From the preparation of the Digital Services Act to the EU’s upcoming legislative proposal on artificial intelligence, the EU will look to balance the aims of ‘promoting innovation’ with ensuring technology is ‘trustworthy’ and ‘human-centric’.

Techno-racism

New technologies are increasingly procured and deployed as a response to complex social problems – particularly in the public sphere – in the name of ‘innovation’ and enhanced ‘efficiency’.

Yet, the impact of these technologies on people of colour, particularly in the fields of policing, migration control, social security, and employment, is systematically overlooked.

Increasing evidence demonstrates how emerging technologies may not only exacerbate existing inequalities, but in effect differentiate, target and experiment on communities at the margins – racialised people, undocumented migrants, queer communities, and those with disabilities.

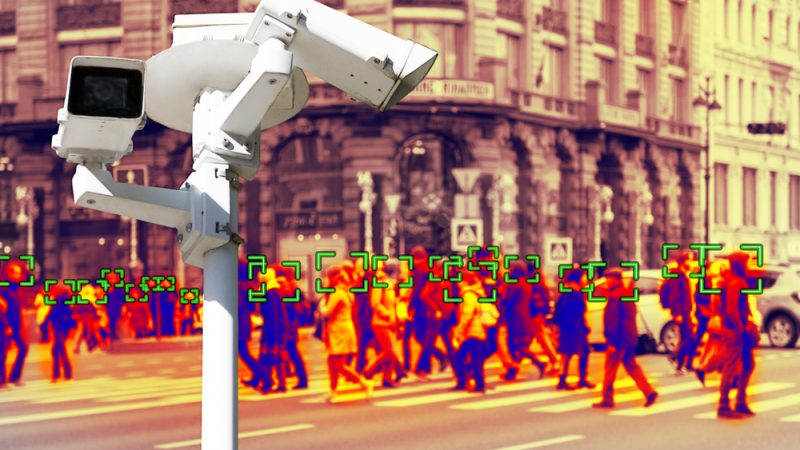

In particular, racialised communities are disproportionately affected by surveillance, (data-driven) profiling, discrimination online and other digital rights violations.

Automated (over) policing

A key focus of racial justice and digital rights advocates will be the extent to which the upcoming legislation addresses deployments of technology by police and immigration enforcement. More and more cases across Europe reveal how technologies deployed in the field of law enforcement are discriminatory.

Place-based and person-based ‘predictive policing’ technologies are increasingly used to forecast where, and by whom, a narrow range of crimes is likely to be committed. These systems repeatedly assign racialised communities a higher likelihood of presumed future criminality.

Most of these systems are enabled by vast databases containing detailed information about certain populations. Various systems designed to monitor and collect data on future crime and ‘gangs’, including the Gangs Matrix, ProKid-12 SI and the UK’s National Data Analytics Solution, in effect target Black, Brown and Roma men and boys, revealing discriminatory patterns on the basis of race and class.

At the EU level, the development of mass-scale, interoperable repositories of biometric data, such as facial images and fingerprints, to facilitate immigration control has only deepened the already vast infringements of the privacy of undocumented people and racialised migrants.

Not only do predictive policing systems and uses of AI at the border upend the presumption of innocence and the right to privacy, but they also codify racist assumptions linking certain races to crime and suspicious activity.

Predictive policing systems redirect policing toward certain areas and people, increasing the likelihood of (potentially violent and sometimes deadly) encounters with the police.

Banning impermissible uses

We are witnessing growing public awareness of the need to do away with racist and classist technological systems. Last month, Foxglove and the Joint Council for the Welfare of Immigrants used litigation to force the UK Home Office to abandon its racist visa algorithm.

Even large technology and security firms have made concessions. In direct response to #BlackLivesMatter, major firms such as IBM and Microsoft proposed to temporarily halt their collaboration with law enforcement on the use of facial recognition technology.

On the basis of overwhelming evidence, the UN Special Rapporteur on contemporary forms of racism recommended that member states prohibit the use of technologies with a racially discriminatory impact. In drafting its upcoming legislation, the EU must not shy away from taking this stance.

Some EU political leaders have acknowledged the extent to which certain AI systems may pose a danger to racialised communities in Europe.

Speaking in June this year, European Commission Vice-President Vestager warned against predictive policing tools, arguing that ‘immigrants and people belonging to certain ethnic groups might be targeted’ by these deployments.

This week, the European Parliament’s Internal Market and Consumer Protection (IMCO) Committee voted on an opinion warning of a high risk of abuse of facial recognition and other technologies that sort people into categories of risk. Voices in the European Parliament are tentatively heeding the calls to ban discriminatory technologies.

It’s difficult to predict what impact these developments will have at EU level. While the EU’s White Paper on artificial intelligence recognises the need to address discrimination within AI, it makes no bold commitment to prohibiting discriminatory systems.

Yet, without clear legal limits, including the prohibition of the most harmful uses of AI, the harms of discriminatory technologies will not be remedied. To protect the rights and freedoms of everyone in Europe, we call on the European Commission to tackle racism perpetuated by technology effectively.

This op-ed was first published in Euractiv. It was written by Sarah Chander, Senior Policy Advisor at EDRi.