EU privacy regulators and Parliament demand AI and biometrics red lines

In their Joint Opinion on the AI Act, the EDPS and EDPB “call for [a] ban on [the] use of AI for automated recognition of human features in publicly accessible spaces, and some other uses of AI that can lead to unfair discrimination”. Taking the strongest stance yet, the Joint Opinion explains that “intrusive forms of AI – especially those who may affect human dignity – are to be seen as prohibited” on fundamental rights grounds.


-

Biometric mass surveillance flourishes in Germany and the Netherlands

In a new research report, EDRi reveals the shocking extent of biometric mass surveillance practices in Germany, the Netherlands and Poland, which are taking over our public spaces such as train stations, streets and shops. The EU and its Member States must act now to set clear legal limits to these practices, which create a state of permanent monitoring, profiling and tracking of people.


-

Booklet: Depths of biometric mass surveillance in Germany, the Netherlands and Poland

In a new research report, EDRi reveals the shocking extent of unlawful biometric mass surveillance practices in Germany, the Netherlands and Poland, which are taking over our public spaces such as train stations, streets and shops. The EU and its Member States must act now to set clear legal limits to these practices, which create a state of permanent monitoring, profiling and tracking of people.


-

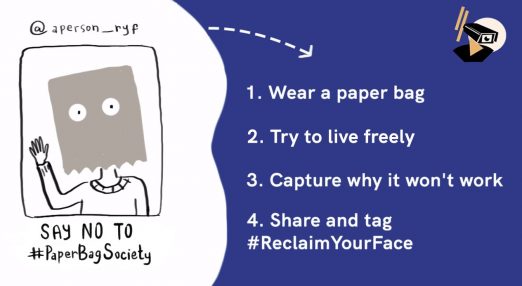

The #PaperBagSociety challenge

The #PaperBagSociety is a social media challenge, part of the #ReclaimYourFace campaign, that invites everyone to share online what it is like to go about life with a paper bag on their head. With it, we aim to raise awareness of how ridiculous it is to try to avoid facial recognition technologies in public spaces, and of why we need to build an alternative future, free from biometric mass surveillance.


-

Workplace, public space: workers organising in the age of facial recognition

‘Surveillance capitalism’ is increasingly threatening workers’ collective action and the human right to public protest.


-

EDRi joins 178 organisations in global call to ban biometric surveillance

From protesters taking to the streets in Slovenia to the subways of São Paulo; from so-called “smart cities” in India to children entering French high schools; from EU border control experiments to the racialised over-policing of people of colour in the US: around the world, people are increasingly and pervasively being subjected to toxic biometric surveillance. This is why EDRi has joined the global Ban Biometric Surveillance coalition, to build on our work in Europe as part of the powerful Reclaim Your Face campaign.


-

The urgent need to #reclaimyourface

The rise of automated video surveillance is often touted as a quick, easy and efficient solution to complex societal problems. In reality, roll-outs of facial recognition and other biometric mass surveillance tools constitute a systematic invasion of people’s fundamental rights to privacy and data protection. As with toxic chemicals, these toxic uses of biometric surveillance technologies need to be banned across Europe.


-

New win against biometric mass surveillance in Germany

In November 2020, reporters at Netzpolitik.org revealed that the city of Karlsruhe wanted to establish a smart video surveillance system in the city centre. The plan involved an AI system that would analyse the behaviour of passers-by and automatically flag conspicuous behaviour. After an intervention by EDRi member CCC, the project was dropped in May 2021.


-

Challenge against Clearview AI in Europe

This legal challenge relates to complaints filed with 5 European data protection authorities against Clearview AI, Inc. ("Clearview"), a facial recognition technology company building a gigantic database of faces.


-

From ‘trustworthy AI’ to curtailing harmful uses: EDRi’s impact on the proposed EU AI Act

Civil society has been the underdog in the European Union's (EU) negotiations on the artificial intelligence (AI) regulation. The goal of the regulation has been to create the conditions for AI to be developed and deployed across Europe, so any shift towards prioritising people’s safety, dignity and rights feels like a great achievement. Whilst a lot needs to happen to make this shift a reality in the final text, EDRi takes stock of its impact on the proposed Artificial Intelligence Act (AIA). EDRi and partners mobilised beyond the organisations that traditionally follow digital policy initiatives, and managed to establish that some uses of AI are simply unacceptable.


-

Can a COVID-19 face mask protect you from facial recognition technology too?

Mass facial recognition risks our collective futures and shapes us into fear-driven societies of suspicion. This got folks at EDRi and Privacy International brainstorming. Could the masks that we now wear to protect each other from Coronavirus also protect our anonymity, preventing the latest mass facial recognition systems from identifying us?


-

Washed in blue: living lab Digital Perimeter in Amsterdam

An increasing number of Dutch government agencies seem to resort to so-called ‘living labs’ and ‘field labs’ to test and experiment with technological innovations in a realistic setting. In recent years, these live laboratories have proven to be a useful stepping stone for introducing new technologies into public space. In recent weeks, EDRi member Bits of Freedom took a closer look at one of those living labs, the so-called Digital Perimeter surrounding the Johan Cruijff ArenA in Amsterdam, and was not pleased with what it saw.
