Our work

EDRi is the biggest European network defending rights and freedoms online. We work to challenge private and state actors who abuse their power to control or manipulate the public. We do so by advocating for robust and enforced laws, informing and mobilising people, promoting a healthy and accountable technology market, and building a movement of organisations and individuals committed to digital rights and freedoms in a connected world.

-

#PrivacyCamp25: Registrations open and call for sessions deadline!

In 2025, we are excited to host you online and at La Tricoterie, Brussels on 30 September to explore Resilience and Resistance in Times of Deregulation and Authoritarianism. Register now and join us for one of the flagship digital rights gatherings in Europe.

Read more

-

EDRi-gram, 10 July 2025

What has the EDRi network been up to over the past two weeks? Find out the latest digital rights news in our bi-weekly newsletter. In this edition: European Commission must champion the AI Act, EDRi pushes back against risky GDPR deregulation, & more!

Read more

-

New research shows online platforms use manipulative design to influence users towards harmful choices

New research by Bits of Freedom investigated the social media platforms Facebook, Snapchat and TikTok, and the e-commerce platforms Shein, Zalando and Booking.com, for their use of manipulative design. The worrying findings indicate that these platforms continue to influence users' choices to their detriment, even though such practices are prohibited by law.

Read more

-

A missed opportunity for enforcement: what the final GDPR Procedural Regulation could cost us

After years of debate, the GDPR Procedural Regulation has been finalised. Despite some improvements, the final text may entrench old problems and create new ones, undermining people’s rights and potentially opening the door to weakening the GDPR itself.

Read more

-

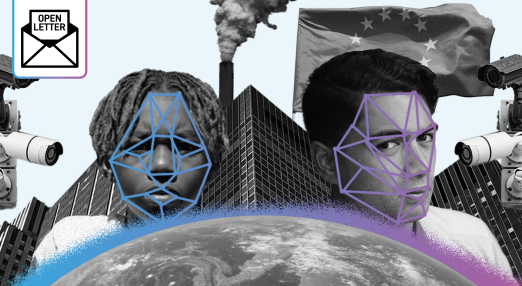

Open letter: European Commission must champion the AI Act amidst simplification pressure

52 civil society organisations, experts and academics have written to the European Commission to express their concerns about growing pressure to suspend or delay the implementation and enforcement of the Artificial Intelligence (AI) Act. Instead of unraveling the EU rulebook, which includes hard-won legal protections for people, the Commission should focus on the full implementation and proper enforcement of its rules, like the AI Act.

Read more

-

Undermining the GDPR through ‘simplification’: EDRi pushes back against dangerous deregulation

EDRi has responded to the European Commission’s consultation on the GDPR ‘simplification’ proposal. The plan to remove documentation safeguards under Article 30(5) risks weakening security, legal certainty and rights enforcement, and opens the door to broader deregulation of the EU’s digital rulebook.

Read more

-

#PrivacyCamp25: Call for Sessions open

Our rights and freedoms – online and offline – are facing unprecedented threats. Recognising this as a collective struggle, we want to explore the theme Resilience and Resistance in Times of Deregulation and Authoritarianism for this edition of Privacy Camp. The 13th edition of Privacy Camp is set to take place on 30 September 2025.

Read more

-

EDRi-gram, 25 June 2025

What has the EDRi network been up to over the past two weeks? Find out the latest digital rights news in our bi-weekly newsletter. In this edition: The case for a spyware ban, EDRi 2025-2030 strategy, EU must reassess Israel’s adequacy status, & more!

Read more

-

Joint civil society response to the Commission’s call for evidence: Impact assessment on data retention by service providers for criminal proceedings

Last week, the EDRi network expressed shared concerns about the introduction of new rules at EU level on the retention of data by service providers for law enforcement purposes.

Read more

-

The EDRi network adopts its 2025-2030 Strategy

The EDRi network adopted its 2025-2030 strategy at the General Assembly in Paris in May 2025. In this blogpost, EDRi’s Executive Director, Claire Fernandez, lays out the year-long journey and the work of many people it took to get us to this important milestone, and some highlights from our objectives and approach moving forward.

Read more

-

Data flows and digital repression: Civil society urges EU to reassess Israel’s adequacy status

On 24 June 2025, EDRi, Access Now and other civil society organisations sent a second letter to the European Commission, urging it to reassess Israel’s data protection adequacy status under the GDPR. The letter outlines six categories of concerns linking Israel’s data practices to escalating human rights violations in Gaza and the West Bank.

Read more

-

OPEN LETTER: The European Commission must act now to defend fundamental rights in Hungary

With Budapest Pride set to take place on June 28, 2025, EDRi and 46 organisations are urging the European Commission to defend fundamental rights in Hungary so that Pride organisers and participants can safely exercise their right to peaceful assembly and freedom of expression.

Read more