The Clearview/Ukraine partnership – How surveillance companies exploit war

Clearview announced it will offer its surveillance tech to Ukraine. It seems no human tragedy is off-limits to surveillance companies looking to sanitise their image.

-

EU AI Act needs clear safeguards for AI systems for military and national security purposes

EDRi affiliate ECNL presents its second set of proposals on the exemptions and exclusions from the AI Act (AIA) for AI used for military and national security purposes. The proposals are also endorsed by European Digital Rights (EDRi), Access Now, AlgorithmWatch, ARTICLE 19, Electronic Frontier Finland (EFFI), Electronic Privacy Information Center (EPIC) and Panoptykon Foundation.

-

Italian DPA fines Clearview AI for illegally monitoring and processing biometric data of Italian citizens

On 9 March 2022, the Italian Data Protection Authority fined the US-based facial recognition company Clearview AI EUR 20 million after finding that the company had monitored and processed the biometric data of individuals on Italian territory without a legal basis. The fine is the highest amount provided for under the General Data Protection Regulation. It follows a complaint filed by the Hermes Centre in May 2021 in a joint action with EDRi members Privacy International, noyb and Homo Digitalis, as well as complaints from individuals and a series of investigations launched in the wake of the 2020 revelations about Clearview AI's business practices.

-

The EU AI Act and fundamental rights: Updates on the political process

Negotiations on the EU’s Artificial Intelligence Act (AIA) are finally taking shape. With lead negotiators named, Council compromise texts published and civil society coalitions formed around the AIA, 2022 will be an important year for the regulation of AI systems.

-

The European Commission does not sufficiently understand the need for better AI law

The Dutch Senate shares Bits of Freedom's concerns about the Artificial Intelligence Act and has written to the European Commission about the need to better protect people from harmful uses of AI, such as biometric surveillance. The Commission's response is not exactly reassuring.

-

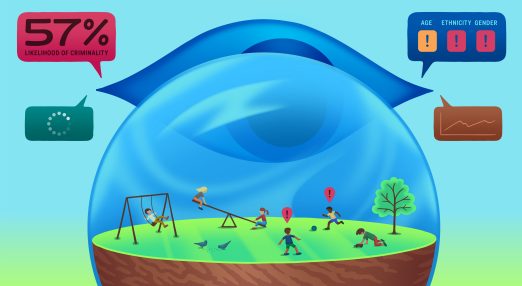

Civil society calls on the EU to ban predictive AI systems in policing and criminal justice in the AI Act

More than 40 civil society organisations, led by Fair Trials and European Digital Rights (EDRi), are calling on the EU to ban predictive systems in policing and criminal justice in the Artificial Intelligence Act (AIA).

-

Short Film “Reclaim Your Face”: the harms of Biometric Mass Surveillance to society

‘Biometric Mass Surveillance poses a danger to society’ is the main message of Alexander Lehmann's latest short film. There is no better place to premiere it than rC3, the Chaos Computer Club's most recent end-of-year event. The film "Reclaim Your Face" highlights the issues surrounding biometric mass surveillance and underlines the harms its systems pose to our society.

-

2022: Important consultations for your Digital Rights!

Public consultations are an opportunity to influence future legislation at an early stage, in the European Union and beyond. They are your chance to help shape a brighter future for digital rights, such as your rights to private life and data protection, and your freedom of opinion and expression.

-

European court supports transparency in risky EU border tech experiments

The Court of Justice of the European Union has ruled that the European Commission must release initially withheld documents relating to the controversial iBorderCtrl project, which experimented with risky biometric ‘lie detection’ systems at EU borders. However, the judgement still protected some of the commercial interests of iBorderCtrl, even though it is an EU-funded migration technology with implications for the protection of people’s rights.

-

Reclaim Your Face impact in 2021

A sturdy coalition, research reports, investigations, coordinated actions and amazing political support at national and EU level. This was 2021 for the Reclaim Your Face coalition: a year that, despite the pandemic, showed the power of a united front.

-

UN Special Rapporteurs challenge EU’s counter-terrorism plans

In their communication, the Special Rapporteurs demonstrate how several existing and planned EU security measures (such as the Regulation on preventing the dissemination of terrorist content online and Europol's processing of sensitive data for profiling purposes) fail to meet the principles of legality, necessity and proportionality enshrined in European and international law. The fatal flaw lies in the use of broad and undefined terms to justify extensive interferences with human rights.

-

The ICO provisionally issues £17 million fine against facial recognition company Clearview AI

Following submissions by EDRi member Privacy International (PI) to the UK Information Commissioner's Office (ICO) and to other European regulators, the ICO has announced its provisional intent to fine Clearview AI.
