Intensified surveillance at EU borders: EURODAC reform needs a radical policy shift

In an open letter addressed to the European Parliament’s Civil Liberties, Justice and Home Affairs Committee, 34 organisations working on the rights of people on the move, children’s rights and digital rights, including European Digital Rights (EDRi), urge policymakers to radically change the direction of the reform of EURODAC, the European Union (EU) database storing asylum seekers’ and migrants’ personal data, so that it respects fundamental rights and international law.


-

UK Investigatory Powers Tribunal finds the regime for bulk communications data to be incompatible with EU law

The UK Investigatory Powers Tribunal issued a declaration in EDRi member Privacy International's challenge to the bulk communications data regime, finding UK legislation to be incompatible with EU law.


-

How a rotten Apple (and bad legislation) could spoil our private communications

In August 2021, Apple announced significant changes to the privacy settings of its messaging and cloud services, only to “pause” them in early September. Earlier this summer, the European Parliament adopted, in a final vote, the derogation from the main piece of EU legislation protecting privacy, the ePrivacy Directive, allowing Big Tech to scan your emails, messages and other online communications.


-

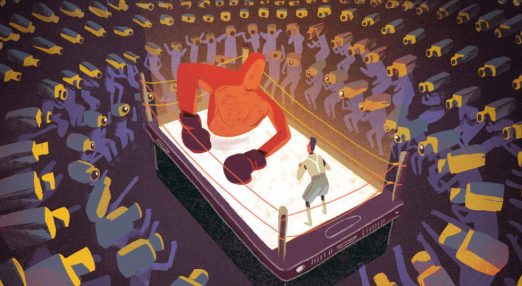

Move fast and break Big Tech’s power

The surveillance-based business model of the dominant technology companies relies on extracting as much personal information as possible and profiling people in order to target them, on- and offline. Over time, Big Tech corporations build a frighteningly detailed picture of billions of individuals, and that knowledge translates directly into (market) power.


-

EDRi submits response to the European Commission AI adoption consultation

On 3 August 2021, European Digital Rights (EDRi) submitted its response to the European Commission’s adoption consultation on the Artificial Intelligence Act (AIA).


-

EDRi joins coalition demanding that states implement a moratorium on the sale, transfer & use of surveillance technology

In this joint open letter, 146 civil society organisations and 28 independent experts worldwide call on states to implement an immediate moratorium on the sale, transfer and use of surveillance technology.


-

Joint open letter by civil society organizations and independent experts calling on states to implement an immediate moratorium on the sale, transfer and use of surveillance technology

In this joint open letter, 156 civil society organizations and 26 independent experts worldwide call on states to implement an immediate moratorium on the sale, transfer and use of surveillance technology.


-

No place for emotion recognition technologies in Italian museums

An Italian museum is trialling emotion recognition systems, even though the practice has been heavily criticised by data protection authorities, scholars and civil society. The ShareArt system collects, among other data, people’s age, gender and emotions. EDRi member Hermes Center has called on the Italian data protection authority (DPA) to open an investigation.


-

EU privacy regulators and Parliament demand AI and biometrics red lines

In their Joint Opinion on the AI Act, the EDPS and EDPB “call for [a] ban on [the] use of AI for automated recognition of human features in publicly accessible spaces, and some other uses of AI that can lead to unfair discrimination”. Taking the strongest stance yet, the Joint Opinion explains that “intrusive forms of AI – especially those who may affect human dignity – are to be seen as prohibited” on fundamental rights grounds.


-

It’s official. Your private communications can (and will) be spied on

On 6 July, the European Parliament adopted, in a final vote, the derogation from the main piece of EU legislation protecting privacy, the ePrivacy Directive, allowing Big Tech to scan your emails, messages and other online communications.


-

Biometric mass surveillance flourishes in Germany and the Netherlands

In a new research report, EDRi reveals the shocking extent of the biometric mass surveillance practices that are taking over public spaces such as train stations, streets and shops in Germany, the Netherlands and Poland. The EU and its Member States must act now to set clear legal limits on these practices, which create a state of permanent monitoring, profiling and tracking of people.


-

Booklet: Depths of biometric mass surveillance in Germany, the Netherlands and Poland

In a new research report, EDRi reveals the shocking extent of the unlawful biometric mass surveillance practices that are taking over public spaces such as train stations, streets and shops in Germany, the Netherlands and Poland. The EU and its Member States must act now to set clear legal limits on these practices, which create a state of permanent monitoring, profiling and tracking of people.
