Artificial intelligence (AI)

Artificial intelligence (AI) refers to a broad range of processes and technologies enabling computers to complement or replace tasks otherwise performed by humans. Such systems have the ability to exacerbate surveillance and intrusion into our personal lives, reflect and reinforce some of the deepest societal inequalities, fundamentally alter the delivery of public and essential services, undermine vital data protection legislation, and disrupt the democratic process itself. In the face of this, EDRi strives to uphold our fundamental rights, democracy, equality and justice in all legislation, policy and practice related to artificial intelligence.

-

The EU must respect the human rights of migrants in the AI Act

Amnesty International Secretary General Agnès Callamard has sent an open letter to the Rapporteurs and members of the lead committees on the EU Artificial Intelligence Act (AI Act), calling on them to use the AI Act to prohibit artificial intelligence (AI) systems that are incompatible with the human rights of migrants, refugees and asylum seekers.

-

Missing: people’s rights in the EU Digital Decade

In June 2023, the European Union (EU) will adopt its first report on the state of the ‘Digital Decade’ – a plan launched in 2022 with digitalisation targets for business, public services and people’s digital skills. The Digital Decade reads more like a business plan than a policy programme.

-

EU Parliament sends a global message to protect human rights from AI

Today, the European Parliament's Internal Market (IMCO) and Civil Liberties (LIBE) committees took several important steps to make this landmark legislation more people-focused by banning AI systems used for biometric surveillance, emotion recognition and predictive policing. Disappointingly, the MEPs stopped short of protecting the rights of migrants.

-

Will MEPs ban Biometric Mass Surveillance in key EU AI Act vote?

The EDRi network and partners have advocated for the EU to ban biometric mass surveillance for over three years through the Reclaim Your Face campaign. On 11 May, their call may become reality as Members of the European Parliament's Internal Market (IMCO) and Civil Liberties (LIBE) committees vote on the AI Act.

-

Where artificial intelligence and climate action meet

The use of artificial intelligence (AI) has a major influence on climate action, climate change mitigation and the work of environmental defenders. It offers potential benefits, for example when it is used to enhance high-resolution mapping of deforestation, coral reef loss and soil erosion. On the other hand, it poses a threat to the climate and its defenders when it drives the extraction of natural resources, and when automated online surveillance is used to strengthen the power of states and corporations to suppress climate activism and grassroots resistance.

-

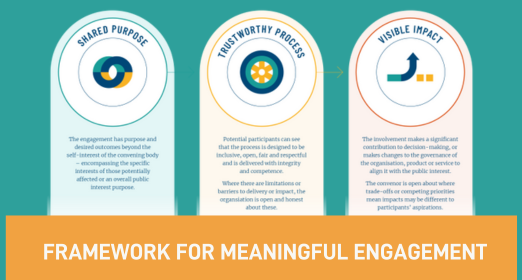

Moving from empty buzzwords to real empowerment: a framework for enabling meaningful engagement of external stakeholders in AI

The use of artificial intelligence (AI) is accelerating. So is the need to ensure that AI systems are not only effective, but also fair, non-discriminatory, transparent, rights-based, accountable and sustainable: in short, responsible.

-

As AI Act vote nears, the EU needs to draw a red line on racist surveillance

The EU Artificial Intelligence Act, commonly known as the AI Act, is the first of its kind. Not only will it be a landmark as the first binding legislation on AI in the world – it is also one of the first tech-focused laws to meaningfully address how technologies perpetuate structural racism.

-

Retrospective facial recognition surveillance conceals human rights abuses in plain sight

Following the burglary of a French logistics company in 2019, facial recognition technology (FRT) was used on security camera footage of the incident in an attempt to identify the perpetrators. In this case, the FRT system listed two hundred people as potential suspects. From this list, the police singled out ‘Mr H’ and charged him with the theft, despite a lack of physical evidence to connect him to the crime. The judge decided to rely on this notoriously discriminatory technology, sentencing Mr H to 18 months in prison.

-

Civil society urges European Parliament to protect people’s rights in the AI Act

In the run-up to the AI Act vote in the European Parliament, civil society organisations are calling on the European Parliament to prioritise fundamental rights and protect people affected by artificial intelligence systems.

-

Open Letter: The AI video surveillance measures in the 2024 Olympic Games law violate human rights

In an open letter, EDRi, ECNL, La Quadrature du Net, Amnesty International France and 34 other civil society organisations call on the French Parliament to reject Article 7 of the proposed law on the 2024 Olympic and Paralympic Games.

-

The secret services’ reign of confusion, rogue mayors, racist tech and algorithm oversight (or not)

Have a quick read through January’s most interesting developments at the intersection of human rights and technology from the Netherlands.

-

2023: Important consultations for your Digital Rights!

Public consultations are an opportunity to influence future legislation at an early stage, in the European Union and beyond. They are your chance to help shape a brighter future for digital rights, such as your right to a private life, data protection, and freedom of opinion and expression.
