EU AI Act Trilogues: Status of Fundamental Rights Recommendations

As the EU AI Act negotiations continue, a number of controversial issues remain open. At stake are vital questions, including the extent to which general-purpose/foundation models are regulated and, crucially, how far the AI Act effectively prevents harm from the use of AI for law enforcement, migration and national security purposes.

-

Unchecked AI will lead us to a police state

Across Europe, police, migration and security authorities are seeking to develop and use AI in a growing number of contexts. From the planned use of AI-based video surveillance at the 2024 Paris Olympics to the millions in EU funds invested in AI-based surveillance at Europe’s borders, AI systems are increasingly part of the state surveillance infrastructure.

-

Potential loopholes in the AI Act could allow use of intrusive tech on ‘national security’ grounds

Negotiators in both the European Union (EU) and the Council of Europe (COE) are considering excluding AI systems designed, developed and used for military purposes, national defence and national security from the scope of their final regulatory frameworks. If this happens, it will leave a huge regulatory gap for such systems.

-

Global civil society and experts statement: Stop facial recognition surveillance now

198 civil society groups and eminent experts are calling on governments to stop the use of facial recognition surveillance by police, authorities and private companies.

-

EU lawmakers must regulate the harmful use of tech by law enforcement in the AI Act

115 civil society organisations are calling on EU lawmakers to regulate, in the AI Act, the use of AI technology for harmful and discriminatory surveillance by law enforcement, migration authorities and national security forces.

-

Council of Europe must not water down its human rights standards in convention on AI

In a joint statement, civil society calls for a broad scope and definition of AI systems, and no blanket exemptions for AI systems used for national defence or national security.

-

EU legislators must close dangerous loophole and protect human rights in the AI Act

Over 115 civil society organisations are calling on EU legislators to remove a major loophole in the high-risk classification process of the Artificial Intelligence (AI) Act and maintain a high level of protection for people’s rights in the legislation.

-

All eyes on EU: Will Europe’s AI legislation protect people’s rights?

As the EU’s AI Act moves into the final phase of negotiations, key battles are emerging over the protection of human rights.

-

Civil society calls on EU to protect people’s rights in the AI Act ‘trilogue’ negotiations

As EU institutions start decisive meetings on the Artificial Intelligence (AI) Act, a broad civil society coalition is urging them to prioritise people and fundamental rights in this landmark legislation.

-

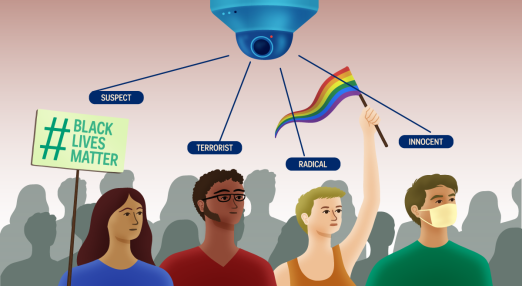

Digital rights for civil society and civil society for digital rights: how surveillance technologies shrink civic spaces

Digital technology has transformed civic spaces, both online and offline. In our digital societies, characterised by injustice and power imbalances, technology contributes to shrinking civic spaces. To defend civic spaces against surveillance, we need strong, well-resourced civil society organisations and movements.

-

European Parliament draws red line against biometric surveillance society

On Wednesday 14 June 2023, the European Parliament voted to ban most public mass surveillance uses of biometric systems. This is the biggest achievement to date for the eighty organisations and quarter of a million people who have supported the Reclaim Your Face campaign's demand to end biometric mass surveillance (BMS) in Europe.

-

EU Parliament calls for ban on public facial recognition, but leaves human rights gaps in final position on AI Act

The final EU Parliament position upholds all of the fundamental rights demands that were added at committee level. Despite efforts to overturn it, the final position also maintains the committees' strong stance against biometric mass surveillance practices. However, it is disappointing that the plenary missed the opportunity to strengthen protections for people affected by the use of AI and for the rights of migrants, refugees and asylum seekers.
