Our work

EDRi is the biggest European network defending rights and freedoms online. We work to challenge private and state actors who abuse their power to control or manipulate the public. We do so by advocating for robust and enforced laws, informing and mobilising people, promoting a healthy and accountable technology market, and building a movement of organisations and individuals committed to digital rights and freedoms in a connected world.

-

#PrivacyCamp25: Call for Sessions open

Our rights and freedoms, online and offline, are facing unprecedented threats. Recognising this as a collective struggle, we want to explore the theme "Resilience and Resistance in Times of Deregulation and Authoritarianism" for this edition of Privacy Camp. The 13th edition of Privacy Camp is set to take place on 30 September 2025.

Read more

-

EDRi-gram, 25 June 2025

What has the EDRi network been up to over the past two weeks? Find out the latest digital rights news in our bi-weekly newsletter. In this edition: the case for a spyware ban, the EDRi 2025-2030 strategy, why the EU must reassess Israel's adequacy status, and more!

Read more

-

Joint civil society response to the Commission’s call for evidence: Impact assessment on data retention by service providers for criminal proceedings

Last week, the EDRi network expressed shared concerns about the introduction of new rules at EU level on the retention of data by service providers for law enforcement purposes.

Read more

-

The EDRi network adopts its 2025-2030 Strategy

The EDRi network adopted its 2025-2030 strategy at the General Assembly in Paris in May 2025. In this blogpost, EDRi's Executive Director, Claire Fernandez, lays out the year-long journey and the work of many people it took to reach this important milestone, and shares some highlights of our objectives and approach moving forward.

Read more

-

Data flows and digital repression: Civil society urges EU to reassess Israel’s adequacy status

On 24 June 2025, EDRi, Access Now and other civil society organisations sent a second letter to the European Commission, urging it to reassess Israel’s data protection adequacy status under the GDPR. The letter outlines six categories of concerns linking Israel’s data practices to escalating human rights violations in Gaza and the West Bank.

Read more

-

OPEN LETTER: The European Commission must act now to defend fundamental rights in Hungary

With Budapest Pride set to take place on 28 June 2025, EDRi and 46 organisations are urging the European Commission to defend fundamental rights in Hungary so that Pride organisers and participants can safely exercise their rights to peaceful assembly and freedom of expression.

Read more

-

French Administrative Supreme Court illegitimately buries the debate over internet censorship law

In November 2023, EDRi and members filed a complaint against the French decree implementing the EU regulation addressing the dissemination of 'terrorist content' online. Last week, the French supreme administrative court rejected our arguments and refused to refer the case to the Court of Justice of the European Union.

Read more

-

The EU must stop the digitalisation of the deportation regime and withdraw the new Return Regulation

The European Commission's new legislative proposal for a deportation regulation fuels detention, criminalisation, and digital surveillance. The #ProtectNotSurveil coalition is demanding an end to the deportation regime and calling on the Commission to withdraw its proposal.

Read more

-

Spyware and state abuse: The case for an EU-wide ban

EDRi's position paper addresses the challenges posed by state use of spyware in the EU. It also tackles how spyware should be legally defined in a way that shields us from future harms, as well as the dangers of the proliferation of commercial spyware in Europe. Based on a values-based analysis of spyware, the paper concludes that the only human-rights-compliant approach is a full ban.

Read more

-

EDRi-gram, 12 June 2025

What has the EDRi network been up to over the past two weeks? Find out the latest digital rights news in our bi-weekly newsletter. In this edition: UK data adequacy under scrutiny, the ProtectEU strategy as a step further towards digital dystopia, and more!

Read more

-

All Eyes on my Period? Period tracking apps and the future of privacy in a post-Roe world

Privacy International investigated eight of the most popular period-tracking apps to analyse how they function and process users' reproductive health data. Their findings raised concerns for users' privacy, given the sensitive nature of the health data involved. These findings come in the context of a global rollback of reproductive rights and fears that law enforcement will force apps to hand over data.

Read more

-

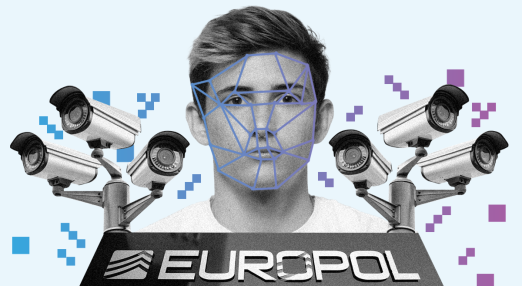

LIBE vote on Europol reform blow to the Commission, but still legitimises an expanding surveillance regime

The European Parliament's LIBE committee vote on a reform of the Europol Regulation was a mixed bag. Although it was a blow to the European Commission's original proposal, it still legitimised an expanding surveillance regime built on Europol's ever-growing powers and resources. Read the Protect Not Surveil coalition's statement.

Read more