Leaked document: Does the EU Commission really plan to tackle illegal content online?

On 14 September, Politico published a leaked draft of the European Commission’s Communication “Tackling Illegal Content Online”. The Communication contains “guidelines” to tackle illegal content, while remaining coy in key areas. It is expected to be officially published on 28 September.

Introduction

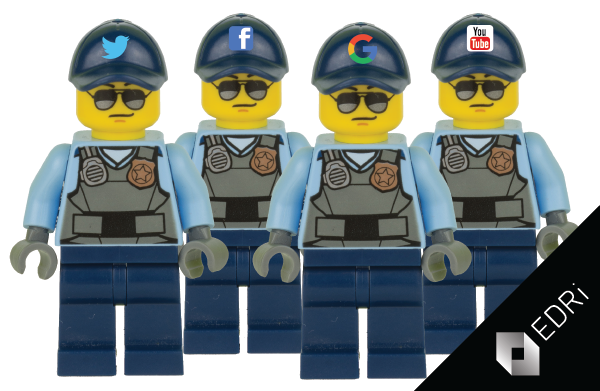

The European Commission’s approach builds on the increasingly loud political voices demanding that somebody does something about bad things online, while carefully avoiding any responsibility for governments to do anything concrete. Indeed, not only does the introduction barely mention the role of states in fighting criminal activity online, it also describes (big) platforms as having a “central role” in society. States can investigate, prosecute and punish crimes, online and offline; internet companies cannot – and should not.

Investigation and prosecution

In line with existing practice, the focus is on headline-grabbing actions by internet companies as THE solution to various forms of criminality online.

Under the Commission-initiated industry “code of conduct” on hate speech, the law is downgraded behind companies’ terms of service. The Communication continues in the same vein.

The scale of the lack of interest in investigating and prosecuting the individuals behind the uploads of terrorist material – or in whether or not this material is even illegal – is proven by a recent response to a parliamentary question in which the Commission confirmed that the EU Internet Referral Unit “does not keep any statistics of how many of the referrals to Member States led to the opening of an investigation.” Instead, the draft Communication lists some statistics about the speed of removal of possibly illegal content, without a real review mechanism, measures to identify and rectify counterproductive effects on crime-fighting, recommendations on counter-notice systems or any attempts in support of real, accountable transparency.

Duty of care – intermediaries

The draft Communication asserts, with no references or explanation, that platforms have a “duty of care”. It is difficult to work out if the Commission is seeking to assert that a legal “duty of care” exists. Such duties are mentioned in recital 48 of the E-Commerce Directive. However, correspondence (pdf) between the Commission and former Member of the European Parliament (MEP) Charlotte Cederschiöld (EPP) at the time of adoption of the Directive proves conclusively that no such “duties” exist in EU law, beyond the obligations in the articles of the E-Commerce Directive.

Duty of care – national authorities

The draft Communication suggests no diligence for national authorities regarding review processes, record-keeping, assessing counterproductive effects, anti-competitive effects, over-deletion of content, complaints mechanisms for over-deletion, or the investigation or prosecution of serious crimes behind, for example, child abuse. Apparently, the crimes in question are not serious enough for Member States to have a duty of care of their own. Instead, they hide behind newspaper headlines. The German Justice Ministry indicated, for example, that it had no idea at all about the illegality of 100 000 posts deleted by Facebook nor, if they were illegal, whether any of the posts had been investigated (pdf).

Protecting legal speech, but how?

The draft Communication puts the emphasis on asking companies to proactively search for potentially illegal content, “strongly encouraging” “voluntary”, non-judicial measures for removal of content, and encouraging systems of “trusted flaggers” to report and remove allegedly illegal content more easily. While the European Commission makes reference to the need for adequate safeguards “adapted to the specific type of illegal content concerned”, it fails to suggest any protection or compensation for individuals in cases of removal of legal content, besides a right of appeal or measures against bad-faith notices. The leaked Communication also fails to contemplate any measures to protect challenging speech of the kind the European Court of Human Rights insisted must be protected.

Regulation by algorithm

It is very worrisome that the Commission is encouraging and funding automatic detection technology, particularly when at the same time it recognises that “one-size-fits-all rules on acceptable removal times are unlikely to capture the diversity of contexts in which removals are processed”. It is also worrisome that the leaked Communication claims that “voluntary, proactive measures [do] not automatically lead to the online platform concerned playing an active role”. This means that the Commission believes that actively searching for illegal content does not imply knowledge of any illegal content that exists. Ironically, in the Copyright Directive, the Commission’s position is that any optimisation whatsoever of content (such as automatic indexing) does imply knowledge of the specific copyright status of the content. With regard to automatic detection of possible infringements, the Commission recognises human intervention as “best industry practice”. It refers to human intervention as “important”, without actually recommending it, despite acknowledging that “error rates are high” in relation to some content.

In addition, astonishingly, the draft Communication suggests that we need to avoid making undue efforts to make sure that the (possibly automatic) removals demanded by these non-judicial authorities are correct: “A reasonable balance needs to be struck between ensuring a high quality of notices coming from the trusted flaggers and avoiding excessive levels of administrative burden”, the leaked Communication says.

Points worth keeping in the final draft

To be fair, the draft Communication also contains some positive points. It is welcome that the Commission recognises that…

- big online platforms are not the only actors that are important;

- “the fight against illegal content online must be carried out with proper and robust safeguards balancing all fundamental rights … such as freedom of expression and due process” – even if the draft Communication doesn’t mention who should provide them, what they are or to whom they should be available;

- “a coherent approach to removing illegal content does not exist at present in the EU”;

- the “nature, characteristics and harm” of illegal content are very diverse, leading to “different fundamental rights implications”, and that sector-specific solutions should be addressed, where appropriate;

- harmful content “is – in general – not illegal”;

- “the approach to identifying and notifying illegal content should be subject to judicial scrutiny”;

- the possibility of investigation should be facilitated – even if it omits to mention any obligations on transparency with regard to if or how often there are investigations;

- the role of “trusted flaggers” should comply with certain criteria – even if the draft Communication does not mention what those would be – and that they should be auditable and accountable, and that abuses must be terminated;

- notices must be “sufficiently precise and adequately substantiated”;

- content-dependent exceptions are foreseen for automatic stay-down procedures, even if the Commission makes unsubstantiated and at least partly false assertions about the effectiveness of such measures;

- transparency reports are encouraged, even though nothing in the draft would resolve the total failure of transparency evident in the implementation of “hate speech codes of conduct”;

- counter-notice procedures are important and should therefore be encouraged;

- filtering technologies have limitations, even if these are not mentioned in all relevant parts of the draft and even if their damaging impact on freedom of expression is not duly addressed.

We can only hope that these important elements remain in the final draft. We participated in expert meetings where we provided a suggested way forward. The Commission knows what is needed if it is to respect its obligations under the Charter of Fundamental Rights of the European Union, and if it is to avoid the recklessness demonstrated by the lack of a review mechanism for, for example, the Internet Referral Units. We will find out if the Commission has the courage to deliver. Further improvements are urgently needed before the final version is published next week.