Artificial Intelligence and Fundamental Rights: Document Pool

Find in this doc pool all EDRi analyses and documents related to Artificial Intelligence (AI) and fundamental rights

In Europe and around the world, AI systems are used to monitor and control us in public spaces, predict our likelihood of future criminality, facilitate violations of the right to claim asylum, predict our emotions and categorise us, and to make crucial decisions that determine our access to public services, welfare, education and employment.

Without strong regulation, companies and governments will continue to use AI systems that exacerbate mass surveillance, structural discrimination, centralised power of large technology companies, unaccountable public decision-making and environmental damage.

Therefore, EDRi calls for the European Union in its upcoming regulation on AI to:

- Empower affected people by upholding a framework of accountability, transparency, accessibility and redress

This includes requiring fundamental rights impact assessments before deploying high-risk AI systems, registration of high-risk systems in a public database, horizontal and mainstreamed accessibility requirements for all AI systems, a right to lodge complaints when people’s rights are violated by an AI system, a right to representation, and rights to effective remedies.

- Limit harmful and discriminatory surveillance by national security, law enforcement and migration authorities

When AI systems are used for law enforcement, security and migration control, there is an even greater risk of harm and violations of fundamental rights, especially for already marginalised communities. There need to be clear red lines for such use to prevent harms. This includes bans on all types of remote biometric identification, predictive policing systems, individual risk assessments and predictive analytic systems in migration contexts.

- Push back on Big Tech lobbying and remove loopholes that undermine the regulation

For the AI Act to be effectively enforced, negotiators need to push back against Big Tech’s lobbying efforts to undermine the regulation. This is especially important for the risk classification of AI systems. This classification needs to be objective and must not leave room for AI developers to self-determine whether their systems are ‘significant’ enough to be classified as high-risk and require legal scrutiny. Tech companies, with their profit-making incentives, will always want to under-classify their own AI systems.

1. EDRi analysis

2. EDRi member resources

3. EDRi articles, blogs and press releases

4. Legislative documents

5. Key dates (indicative)

6. Key policymakers

7. Other useful resources

1. EDRi analysis

- Statement: Civil society calls on EU to protect people’s rights in the AI Act ‘trilogue’ negotiations (12.07.2023)

- Open Letter: Civil society calls for the EU AI Act to better protect people on the move (06.12.2022)

- Statement: Civil society reacts to European Parliament AI Act draft Report (04.05.2022)

- The EU’s Artificial Intelligence Act: Civil society amendments (03.05.2022)

- The EU AI Act and fundamental rights: Updates on the political process (09.03.2022)

- EDRi and Fair Trials statement: Civil society calls on the EU to ban predictive AI systems in policing and criminal justice in the AI Act (01.03.2022)

- EDRi statement: Civil society calls on the EU to put fundamental rights first in the AI Act (30.11.2021)

- EDRi statement: EDRi and 41 human rights organisations call on the European Parliament to reject amendments to AI and criminal law report (04.10.2021)

- Report: Beyond Debiasing: Regulating AI and its Inequalities (21.09.2021)

- EDRi consultation response: European Commission adoption consultation: Artificial Intelligence Act (03.08.2021)

- EDRi report: The Rise and Rise of Biometric Mass Surveillance in the EU (07.07.2021)

- EDRi statement: EU’s AI law needs major changes to prevent discrimination and mass surveillance (21.04.2021)

- EDRi Consultation response: European Commission consultation on Artificial Intelligence (04.06.2020)

- EDRi Recommendations for a fundamental rights-based Artificial Intelligence Regulation: addressing collective harms, democratic oversight and impermissible use (04.06.2020)

- EDRi Explainer AI and fundamental rights: How AI impacts marginalised groups, justice and equality (04.06.2020)

- EDRi Answering Guide to the European Commission consultation on AI (04.06.2020)

- EDRi paper: Ban Biometric Mass Surveillance (13.05.2020)

- EDRi briefing: Structural racism, Digital Rights and Technology (04.07.2020)

- EDRi Use case research: Impermissible AI and fundamental rights breaches (06.08.2020)

- EDRi paper: Technological Testing Grounds: Migration Management Experiments and Reflections from the Ground Up (09.11.2020)

- EDRi Open letter with 62 human and digital rights organisations calling for red lines on impermissible uses of AI (12.01.2021)

- EDRi Open letter with 56 human and digital rights organisations calling for a ban on biometric mass surveillance practices (01.04.2021)

- EDRi Facial recognition & Biometric Surveillance: Document pool

2. EDRi member resources

- EDRi campaign – Reclaim Your Face: Civil society initiative for a ban on biometric mass surveillance practices

- Access Now, All Out campaign: Computers are binary, people are not: how AI systems undermine LGBTQ identity (06.04.2021)

- Access Now: Human rights in the age of artificial intelligence (2018)

- Access Now: Trust and excellence — the EU is missing the mark again on AI and human rights (11.06.2020)

- Access Now: Facial recognition on trial: emotion and gender “detection” under scrutiny in a court case in Brazil (29.06.2020)

- Access Now: Europe’s Approach to Artificial Intelligence: How AI strategy is Evolving (09.12.2020)

- Access Now: The EU should regulate AI on the basis of rights, not risks (17.02.2021)

- Article 19 & Privacy International: Privacy and Freedom of Expression in the Age of Artificial Intelligence (04.2018)

- Article 19: Governance with teeth: How human rights can strengthen FAT and ethics initiatives on artificial intelligence (17.04.2019)

- Article 19: Emotional Entanglement: China’s emotion recognition market and its implications for human rights (01.2021)

- Bits of Freedom: Facial recognition: A convenient and efficient solution, looking for a problem? (29.01.2020)

- Bits of Freedom: Het systeem doet precies wat het wordt opgedragen (29.01.2021)

- Bits of Freedom: We hebben AI-wetgeving nodig waarin onze rechten echt beschermd worden (01.04.2021)

- Chaos Computer Club: The Ghost in the Machine: An Artificial Intelligence Perspective on the Soul (video)

- Free Software Foundation Europe: Artificial intelligence as Free Software with Vincent Lequertier (podcast) (25.09.2020)

- Hermes Center: Personalisation algorithms and elections: breaking free of the filter bubble (07.02.2019)

- Homo Digitalis: Artificial intelligence in the courts: Myths and reality (31.12.2018)

- Homo Digitalis: Participation in the public consultation on the White Paper on Artificial Intelligence (14.06.2020)

- noyb: Clearview AI’s biometric photo database deemed illegal in the EU, but only partial deletion ordered (28.01.2021)

- Panoptykon Foundation: Black-Boxed Politics (17.02.2020)

- Panoptykon Foundation: Submission to the consultation on the ‘White Paper on Artificial Intelligence’ (10.06.2020)

- Privacy International: Profiling and Automated Decision Making: Is Artificial Intelligence Violating Your Right to Privacy? (05.12.2020)

- Privacy International: The Identity Gatekeepers and the Future of Digital Identity (07.10.2020)

- Statewatch: Police seeking new technologies as Europol’s “Innovation Lab” takes shape (18.11.2020)

- Statewatch: “Artificial intelligence” could be used to screen and profile travellers to the EU (26.02.2021)

- Access Now: The EU AI Act: How to (truly) protect people on the move (13.05.2022)

- ECNL: New poll exposes public fears over the use of AI by governments in national security (30.11.2022)

- Amnesty International: The EU must respect human rights of migrants in the AI Act (17.05.2023)

3. EDRi articles, blogs and press releases

- Thomson Reuters: AI excellence, trust and ethics… But what about rights? (06.04.2020)

- EDRi blog: Can the EU make AI “trustworthy”? No – but they can make it just (04.06.2020)

- EU Scream: Data & Dystopia podcast (16.06.2020)

- Euractiv: US Corporations are talking about bans for AI. Will the EU? (19.06.2020)

- Euractiv: Technology has codified structural racism – will the EU tackle racist tech? (03.09.2020)

- EDRi blog: Down with (discriminating) systems (10.09.2020)

- EDRi blog: EDRi & Access Now – Attention EU regulators: We need more than ethics to keep us safe (21.10.2020)

- EDRi blog: Bits of Freedom – We want more than “symbolic gestures in response to discriminatory algorithms”

- EDRi blog: Chaos Computer Club and noyb – How to reclaim your face from Clearview AI (10.02.2021)

- Euronews: Mass facial recognition is the apparatus of police states and must be regulated (17.02.2021)

- EDRi blog: 116 MEPs agree – we need AI red lines to put people over profit

- European Parliament Magazine: This is the EU’s chance to stop racism in artificial intelligence (16.03.2021)

- EUobserver: Why EU needs to be wary that AI will increase racial profiling (19.04.2021)

- Euronews: EU’s new artificial intelligence law risks enabling Orwellian surveillance states (22.04.2021)

- EDRi: From ‘trustworthy AI’ to curtailing harmful uses: EDRi’s impact on the proposed EU AI Act (01.06.2021)

- EDRi: EU privacy regulators and Parliament demand AI and biometrics red lines (14.07.2021)

- EDRi: Celebrating a strong European Parliament stance on AI in law enforcement (06.10.2021)

- EDRi press release: The European Parliament must go further to empower people in the AI act (21.04.2022)

- EDRi blog: Regulating Migration Tech: How the EU’s AI Act can better protect people on the move (09.05.2022)

- Thomson Reuters: OPINION: The AI Act: EU’s chance to regulate harmful border technologies (17.05.2022)

- EDRi: #ProtectNotSurveil: EU must ban AI uses against people on the move (09.02.2023)

- EDRi: Civil society urges European Parliament to protect people’s rights in the AI Act (19.04.2023)

- Euronews: As AI Act vote nears, the EU needs to draw a red line on racist surveillance (25.04.2023)

- EDRi press release: EU Parliament sends a global message to protect human rights from AI (11.05.2023)

- EDRi press release: EU Parliament calls for ban of public facial recognition, but leaves human rights gaps in final position on AI Act (14.06.2023)

- Euronews: All eyes on EU: Will Europe’s AI legislation protect people’s rights? (17.07.2023)

4. Legislative documents

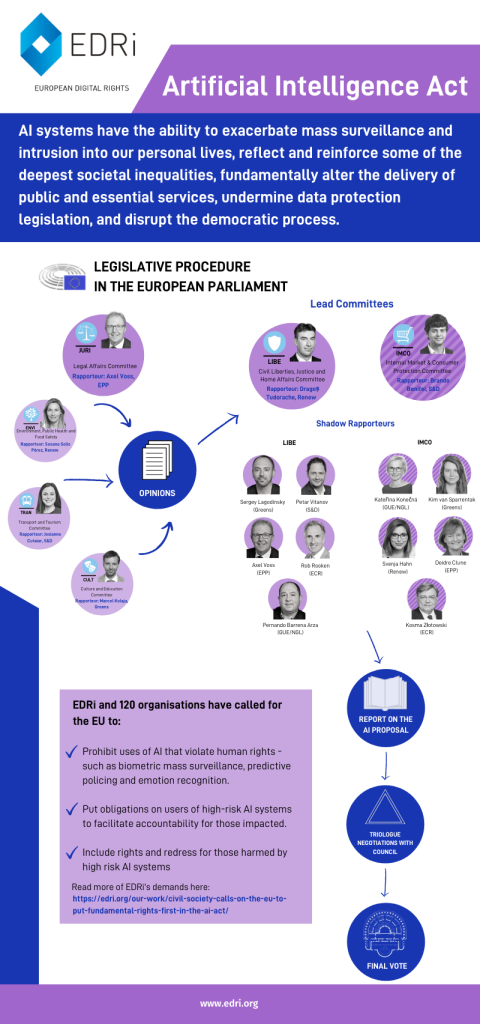


European Commission

- Communication: Building Trust in Human-Centric Artificial Intelligence (08.04.2019)

- White Paper on Artificial Intelligence – a European approach to excellence and trust (19.02.2020)

- Consultation: White Paper on Artificial Intelligence (17.07.2020)

- European Commission Artificial Intelligence Inception Impact Assessment (21.04.2021)

- Commission: Regulation on a European Approach for Artificial Intelligence (Leaked)

- European Commission: Proposal for a Regulation laying down harmonised rules on artificial intelligence (21.04.2021)

European Parliament

- European Parliament, Resolution on a civil liability regime for artificial intelligence (2020/2014(INL))

- European Parliament, Resolution on a framework of ethical aspects of artificial intelligence, robotics and related technologies (2020/2012(INL))

- European Parliament, Resolution on intellectual property rights for the development of artificial intelligence technologies (2020/2015(INI))

- European Parliament, Report on Artificial intelligence in education, culture and the audiovisual sector (2020/2017(INI))

- European Parliament, Artificial intelligence in criminal law and its use by the police and judicial authorities in criminal matters (2020/2016(INI)) – forthcoming

- European Parliament, Text adopted – plenary (P9_TA(2023)0236) (14.06.2023)

Council of the European Union

Studies:

- European Parliament, EPRS, Selection of publications on Artificial intelligence, January 2021

- European Parliament, EPRS, An EU framework for artificial intelligence, October 2020

- European Parliament, EPRS, Civil liability regime for artificial intelligence, September 2020

- European Parliament, EPRS, European framework on ethical aspects of artificial intelligence, robotics and related technologies, September 2020

5. Key dates (indicative)

- Trilogue dates: 18 July, 3 October and 26 October 2023

- EU Parliament plenary vote – 14 June 2023

- IMCO-LIBE Parliament committee vote – 11 May 2023

- Publication of White Paper on Artificial Intelligence – 19 February 2020

- Closing of the consultation on Artificial Intelligence – 14 June 2020

- Launch of European Commission legislative proposal on artificial intelligence – 21 April 2021

6. Key policymakers

Artificial Intelligence Act (AIA) (IMCO – lead committee)

- Rapporteurs: Brando Benifei (S&D), Dragoș Tudorache (Renew)

- Shadow Rapporteurs: Deirdre Clune (EPP); Svenja Hahn (Renew); Kim van Sparrentak (Greens/EFA); Kateřina Konečná (The Left)

7. Other useful resources

- AI Now Institute: Submission to the European Commission on “White Paper on AI – A European Approach” (14.06.2020)

- AlgorithmWatch: Response to the European Commission’s consultation on AI (06.2020)

- Fair Trials: Regulating Artificial Intelligence for Use in Criminal Justice Systems in the EU – Policy Paper (06.2020)

- Amnesty International: What is the Gangs Matrix? (18.05.2020)

- Amnesty International: We Sense Trouble: Automated Discrimination and Mass Surveillance in Predictive Policing in the Netherlands (30.09.2020)

- App Drivers and Couriers Union: Gig economy workers score historic digital rights victory against Uber and Ola Cabs (12.03.2021)

- Democratic Society: Fear no more: Talking AI (02.10.2020)

- European Disability Forum: Plug and Pray – A disability perspective on artificial intelligence, automated decision-making and emerging technologies (15.12.2020)

- European Network Against Racism: Data Driven Policing – The hardwiring of discriminatory policing practices across Europe (11.2019)

- Human Rights Watch: EU Should Regulate Artificial Intelligence to Protect Rights (14.01.2021)

- Politico: Europe’s artificial intelligence blindspot: Race (16.03.2021)

- Human Rights Watch: How the EU’s Flawed Artificial Intelligence Regulation Endangers the Social Safety Net: Questions and Answers (10.11.2021)

This document was last updated on 9 August 2023